Using Kaniko in Actions-Runner-Controller

One of the most common concerns I hear about actions-runner-controller and security policy is how to build containers without Docker-in-Docker and privileged pods. It seems to come up in every conversation I have about self-hosted compute for GitHub Actions, so much so that I have a “canned reply” for any emails, issues, or discussions asking me about it, and I’ve written blog posts and given conference talks about the many ways to address the concern.

To further secure your container builds, check out reducing the CVEs in your custom images. The Kaniko executor is one of many tools that can run on low-to-zero CVE images.

The problem with building containers in Kubernetes is that it normally relies on having access to Docker, Podman, or similar tools, and these usually require root access on your machine to run. Even rootless Docker still needs a privileged pod to work with seccomp and to mount procfs and sysfs, so while it’s possible to remove sudo and run the Docker daemon without root, it still requires --privileged to run.[1]

I had assumed there was some publicly discoverable code combining a container builder in GitHub Actions with actions-runner-controller. I was wrong - let’s fix that. 🙊

Here’s how to use Google’s Kaniko to build containers in actions-runner-controller without privileged pods or Docker-in-Docker.

Storage setup

To run a container within actions-runner-controller, we’ll need to run the runners in Kubernetes mode with container hooks [2], which lets the runner pod dynamically spin up other pods for job containers instead of relying on the default Docker socket. This means a new set of runners and a new persistent volume to back them (full manifest file for both our test and production namespaces, if you want to jump ahead).

Create the storage class with the following manifest:

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: k8s-mode
  namespace: test-runners # ignored: StorageClasses are cluster-scoped
provisioner: file.csi.azure.com # change this to your provisioner
allowVolumeExpansion: true # probably not strictly necessary
reclaimPolicy: Delete
mountOptions:
  - dir_mode=0777 # this mounts at a directory needing this
  - file_mode=0777
  - uid=1001 # match your pod's user id, this is for actions/actions-runner
  - gid=1001
  - mfsymlinks
  - cache=strict
  - actimeo=30
```

Now give it a persistent volume claim:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-k8s-cache-pvc
  namespace: test-runners
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: k8s-mode # we'll need this in the runner Helm chart
```

And apply it with a quick kubectl apply -f k8s-storage.yml to move forward.

Deploy the runners

Now, we need runners that have the container hooks installed and can run jobs natively in Kubernetes. The pre-built Actions runner image (Dockerfile) does all of this perfectly and is maintained by GitHub, so that’s what we’ll use here. The finished values file is here.

Let’s write a quick values file for the gha-runner-scale-set Helm chart (saved as helm-kaniko.yml):

```yaml
template:
  spec:
    initContainers: # needed to set permissions to use the PVC
      - name: kube-init
        image: ghcr.io/actions/actions-runner:latest
        command: ["sudo", "chown", "-R", "runner:runner", "/home/runner/_work"]
        volumeMounts:
          - name: work
            mountPath: /home/runner/_work
    containers:
      - name: runner
        image: ghcr.io/actions/actions-runner:latest
        command: ["/home/runner/run.sh"]
        env:
          - name: ACTIONS_RUNNER_CONTAINER_HOOKS
            value: /home/runner/k8s/index.js
          - name: ACTIONS_RUNNER_POD_NAME
            valueFrom:
              fieldRef:
                fieldPath: metadata.name
          - name: ACTIONS_RUNNER_REQUIRE_JOB_CONTAINER
            value: "false" # allow non-container steps, makes life easier
        volumeMounts:
          - name: work
            mountPath: /home/runner/_work
containerMode:
  type: "kubernetes"
  kubernetesModeWorkVolumeClaim:
    accessModes: ["ReadWriteOnce"]
    storageClassName: "k8s-mode"
    resources:
      requests:
        storage: 1Gi
```

And apply it!

```shell
$ helm install kaniko-worker \
    --namespace "test-runners" \
    -f helm-kaniko.yml \
    oci://ghcr.io/actions/actions-runner-controller-charts/gha-runner-scale-set \
    --version 0.10.1
Pulled: ghcr.io/actions/actions-runner-controller-charts/gha-runner-scale-set:0.10.1
Digest: sha256:fdde49f5b67513f86e9ae5d47732c94a92f28facde1d5689474f968afeb212ef
NAME: kaniko-worker
LAST DEPLOYED: Wed Dec 18 10:35:23 2024
NAMESPACE: ghec-runners
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
Thank you for installing gha-runner-scale-set.

Your release is named kaniko-worker.
```

Write the workflow

Now that we have runners that can accept container tasks natively within Kubernetes, let’s build and push a container! (full workflow file, if you want to jump ahead)

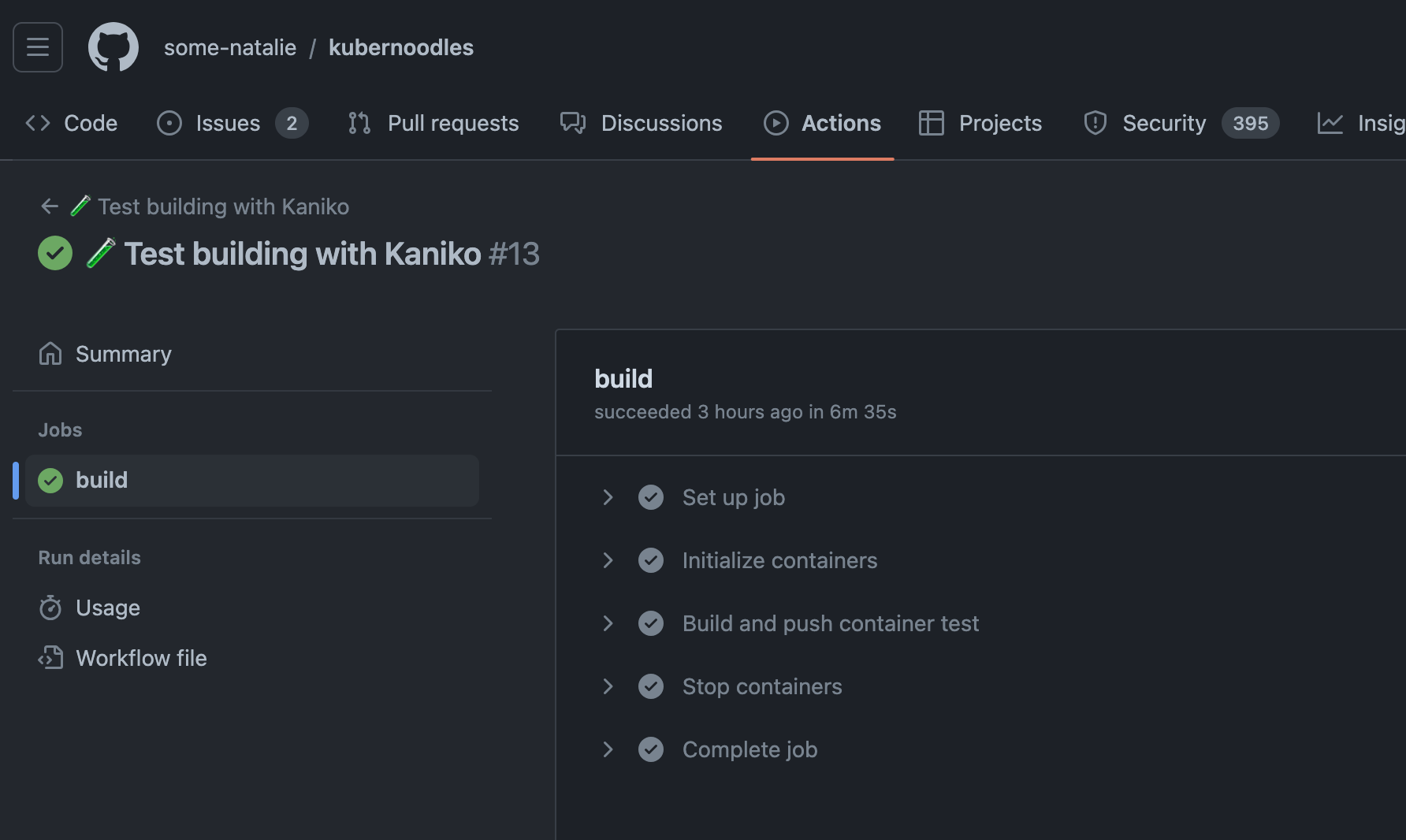

```yaml
name: 🧪 Test building with Kaniko

on:
  workflow_dispatch:

jobs:
  build:
    runs-on: [kaniko-worker] # our new runner set
    container:
      image: gcr.io/kaniko-project/executor:debug # the kaniko image
    permissions:
      contents: read # read the repository
      packages: write # push to GHCR, omit if not pushing to GitHub's container registry
    steps:
      - name: Build and push container test
        run: |
          # Write config file, change to your destination registry
          # (tr strips the line breaks base64 inserts into long output, which would break the JSON)
          AUTH=$(echo -n ${{ github.actor }}:${{ secrets.GITHUB_TOKEN }} | base64 | tr -d '\n')
          echo "{\"auths\": {\"ghcr.io\": {\"auth\": \"${AUTH}\"}}}" > /kaniko/.docker/config.json

          # Configure git
          export GIT_USERNAME="kaniko-bot"
          export GIT_PASSWORD="${{ secrets.GITHUB_TOKEN }}" # works for GHEC or GHES container registry

          # Build and push (sub in your image, of course)
          /kaniko/executor --dockerfile="./images/kaniko-build-test.Dockerfile" \
            --context="${{ github.repositoryUrl }}#${{ github.ref }}#${{ github.sha }}" \
            --destination="ghcr.io/some-natalie/kubernoodles/kaniko-build:test" \
            --push-retry 5 \
            --image-name-with-digest-file /workspace/image-digest.txt
```

In the workflow, I used the debug image, which is my default as I build out new things. It’s also the variant to keep for this setup: the standard executor image is built without a shell, while the debug tag adds a busybox shell, and a run: step like this one needs a shell inside the job container.
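The run step packs two small string conventions into one-liners: the auth field of the Docker-style config.json that Kaniko reads is the base64 of `username:password`, and a git `--context` takes the form `<repo URL>#<ref>#<commit>`. Here is a minimal POSIX sh sketch of both as functions you can try outside the runner. The function names are hypothetical, the credentials are placeholders, and the `tr -d '\n'` is there because base64 may wrap long output onto several lines.

```shell
#!/bin/sh
# Sketch of the string-building steps from the Kaniko run step.
# Function names are hypothetical; written for POSIX sh (e.g. busybox in the debug image).

# docker_auth USER TOKEN
# Prints base64("USER:TOKEN") with no line breaks at all, so it can be
# embedded safely inside a JSON string.
docker_auth() {
  printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
}

# docker_config REGISTRY USER TOKEN
# Prints a minimal config.json with a single "auths" entry for REGISTRY.
docker_config() {
  printf '{"auths": {"%s": {"auth": "%s"}}}\n' "$1" "$(docker_auth "$2" "$3")"
}

# kaniko_git_context REPO_URL REF SHA
# Joins the pieces into Kaniko's git context form: <repo>#<ref>#<commit>.
kaniko_git_context() {
  printf '%s#%s#%s\n' "$1" "$2" "$3"
}

# Example usage with placeholder values (not real credentials):
docker_config ghcr.io octocat not-a-real-token
kaniko_git_context git://github.com/octocat/hello-world.git refs/heads/main 0123abc
```

In the workflow itself, `github.repositoryUrl`, `github.ref`, and `github.sha` fill the three context arguments, and the config output is written to /kaniko/.docker/config.json.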

Once the job is kicked off, the runner spins up another pod for the job container, as shown below:

```shell
$ kubectl get pods -n "test-runners"
NAME                                        READY   STATUS    RESTARTS   AGE
kaniko-worker-n6bfm-runner-rfjhx            1/1     Running   0          108s
kaniko-worker-n6bfm-runner-rfjhx-workflow   1/1     Running   0          9s
```
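In this listing the job pod shows up next to its runner pod with a `-workflow` suffix. If you want to see only those job pods, a small filter over `kubectl get pods --no-headers` output does it. This is a sketch that assumes the suffix stays as observed here (it's what we saw, not a documented contract), and `workflow_pods` is a hypothetical name. The sample input is the listing above, so no cluster is needed to try it.

```shell
#!/bin/sh
# workflow_pods: hypothetical helper that prints the names of job pods created
# by the k8s container hooks, assuming the "<runner pod>-workflow" naming.
# Reads `kubectl get pods` output on stdin; a header line never matches.
workflow_pods() {
  awk '$1 ~ /-workflow$/ { print $1 }'
}

# Against a live cluster:
#   kubectl get pods -n "test-runners" --no-headers | workflow_pods

# Offline, using the listing from this post:
workflow_pods <<'EOF'
NAME READY STATUS RESTARTS AGE
kaniko-worker-n6bfm-runner-rfjhx 1/1 Running 0 108s
kaniko-worker-n6bfm-runner-rfjhx-workflow 1/1 Running 0 9s
EOF
```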

Here’s our successful workflow run!

The full workflow logs, along with kubectl describe pod output for the runner and job pods, are kept in the repository so they don’t rotate out after 90 days.

Notes

One runtime flag to highlight is --label, which adds OCI labels to the finished image as an alternative to the LABEL directive in the Dockerfile. When moving from other container build systems, you may need to edit your Dockerfiles and workflow pipelines to make sure the final image has the correct labels. There are tons of other build flags and options in Kaniko, as well as many different ways to use it. Check out the project on GitHub for a more detailed outline of the available flags. 📚
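Since each label is its own repeated --label flag, it can help to build that part of the command line from a list of `KEY=VALUE` pairs. Here is a minimal POSIX sh sketch. `run_kaniko` and the `KANIKO_EXECUTOR` override are hypothetical names, the label keys are the standard OCI `org.opencontainers.image.*` annotation keys, and the `OWNER/REPO` and commit values are placeholders.

```shell
#!/bin/sh
# run_kaniko DESTINATION KEY=VALUE...
# Hypothetical wrapper: calls the Kaniko executor with one --label flag per
# KEY=VALUE argument. Set KANIKO_EXECUTOR=echo to print the arguments instead.
run_kaniko() {
  dest="$1"
  shift
  # Rotate the positional parameters: append "--label KEY=VALUE" for each
  # original argument, then shift that original argument off the front.
  # The for-loop word list is expanded once, so rewriting "$@" here is safe.
  for kv in "$@"; do
    set -- "$@" --label "$kv"
    shift
  done
  "${KANIKO_EXECUTOR:-/kaniko/executor}" --destination="$dest" "$@"
}

# Dry run with placeholder values:
KANIKO_EXECUTOR=echo run_kaniko "ghcr.io/some-natalie/kubernoodles/kaniko-build:test" \
  "org.opencontainers.image.source=https://github.com/OWNER/REPO" \
  "org.opencontainers.image.revision=0123abc"
```

For a real build you would also pass --dockerfile and --context, as in the workflow above; the sketch keeps only the destination and labels to show the flag handling. Building the list with `set --` rather than string concatenation keeps values containing spaces intact.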

Kaniko certainly isn’t the only player in this space, but it’s the most common one I’ve come across. Here are a few others worth mentioning:

- Buildah in OpenShift

- Moby BuildKit

- Orca-build - possibly unmaintained proof-of-concept?

To do all of this internally, you’ll need to bring in or build a GitHub Actions runner image that can run k8s jobs. The default one from GitHub (link) works great here; if you can’t use it, make sure to read the assumptions for custom runners here before installing the container hooks as outlined above. The Kaniko executor image and all base images will need to be internal too.
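Bringing images internal mostly means rewriting image references to point at your own registry. Here is a hypothetical helper for that rewrite. It assumes your mirror keeps each image’s repository path and drops the source registry host, adding `library/` for Docker Hub official images. `registry.example.internal` is a placeholder, and your mirror’s actual layout may differ (some keep the source host as a path prefix).

```shell
#!/bin/sh
# mirror_ref MIRROR IMAGE
# Hypothetical helper: rewrite an image reference to pull from an internal
# mirror, assuming the mirror keeps the repository path and drops the host.
mirror_ref() {
  mirror="$1"
  ref="$2"
  first="${ref%%/*}"          # first path component, e.g. "gcr.io"
  case "$ref" in
    */*)
      case "$first" in
        # Looks like a registry host (has a dot or port, or is localhost): drop it
        *.*|*:*|localhost) rest="${ref#*/}" ;;
        # Otherwise it's a Docker Hub "org/repo" name: keep it whole
        *) rest="$ref" ;;
      esac
      ;;
    # No slash at all: a Docker Hub official image such as "alpine:3.20"
    *) rest="library/$ref" ;;
  esac
  printf '%s/%s\n' "$mirror" "$rest"
}

# Images from this post, pointed at a placeholder internal registry:
for img in gcr.io/kaniko-project/executor:debug ghcr.io/actions/actions-runner:latest; do
  mirror_ref registry.example.internal "$img"
done
```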

Next - Build custom runners into GitHub Actions so it’ll build/test/deploy itself!

Footnotes

1. A discussion with lots of links on this: Add rootless DinD runner by some-natalie · Pull Request #1644 · actions/actions-runner-controller

2. The official documentation is well worth reading a few times over: Deploying runner scale sets with Actions Runner Controller - GitHub Enterprise Cloud Docs