Signing and verifying multi-architecture containers with Sigstore

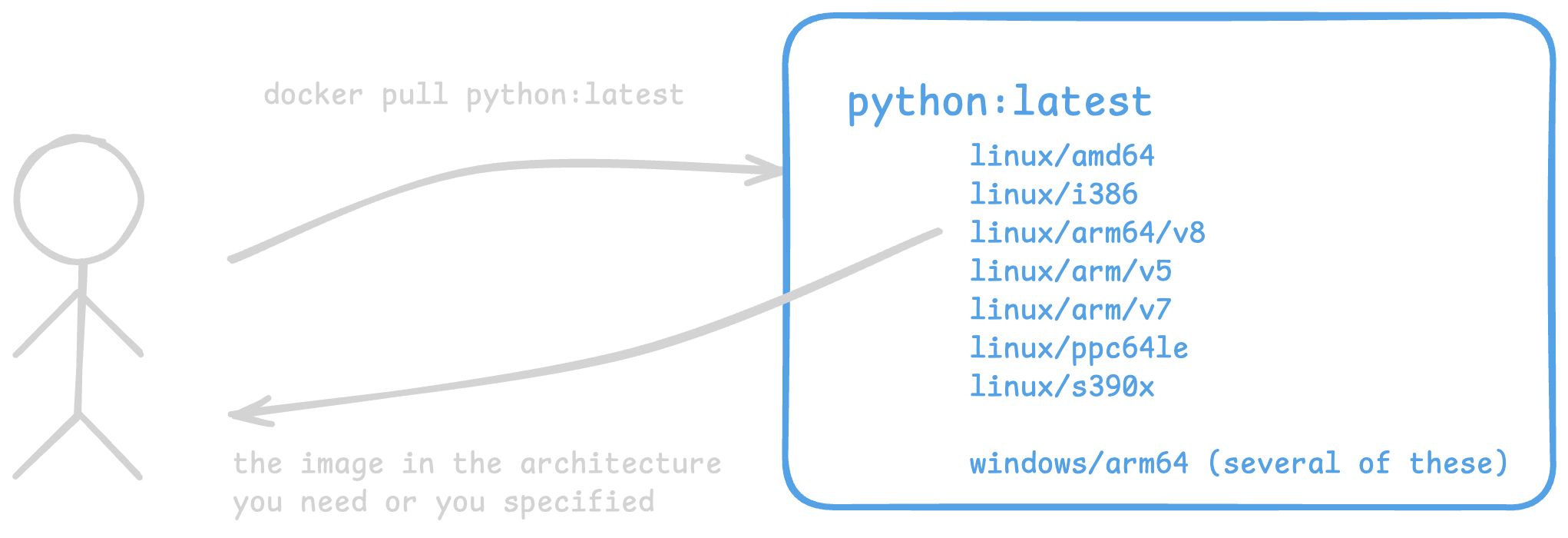

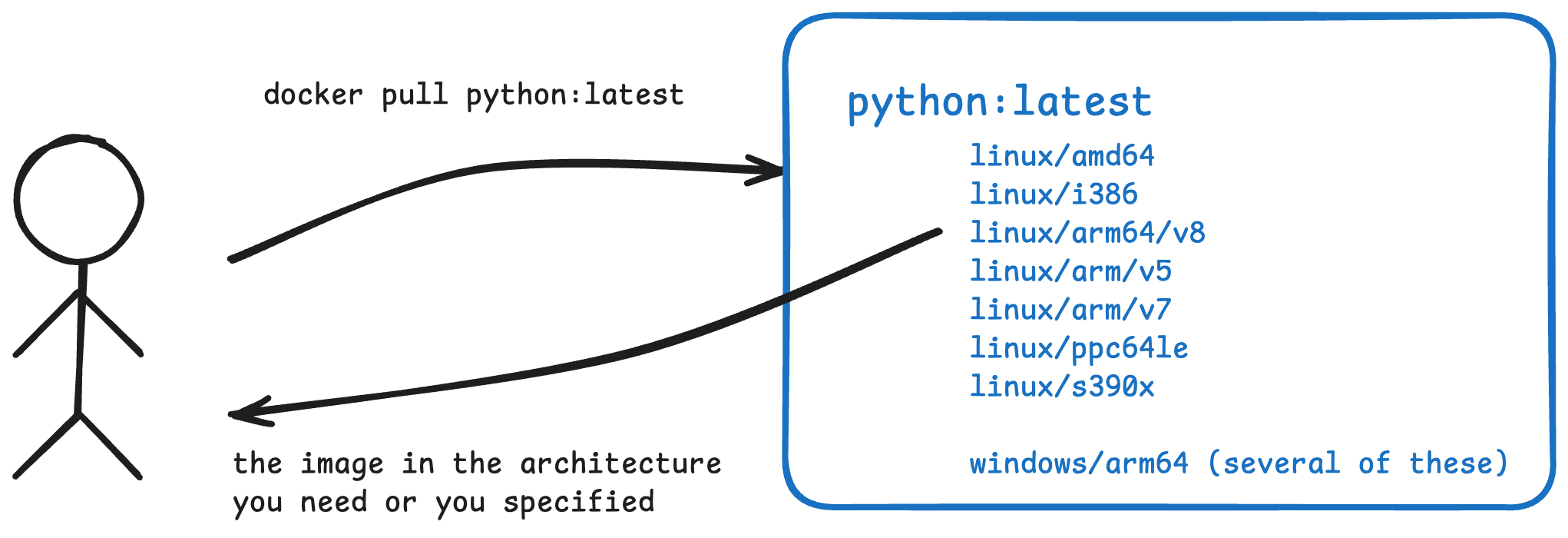

When you run docker pull image it 🪄 magically 🪄 pulls the right architecture. Right?

Hi, I’m Natalie - the technical lead for public sector at Chainguard. As noted earlier, we use Sigstore a lot! So much so that in order to see meaningful trends in the public good instance, you have to filter us out entirely. As a field engineer, this means I’m talking mostly with end users about what it is, how it works, what it can answer for you, how it fits into your organization’s threat model … and answering a ton of user friction points.

Here’s a handful of questions that I see often around this one big thing - how Sigstore works with multi-architecture containers.

This is a write-up of a presentation at OpenSSF Community Day NA 2025 on 26 June 2025 in Denver, CO.

🎥 YouTube recording if that’s more your speed

How did we get here?

I’ve been on an ARM Mac for over a year now, swapping or emulating other architectures as needed. Mostly, everything just works and this feels like magic still!

it feels like magic to not think about software architecture when you “just get the right image” without specifying

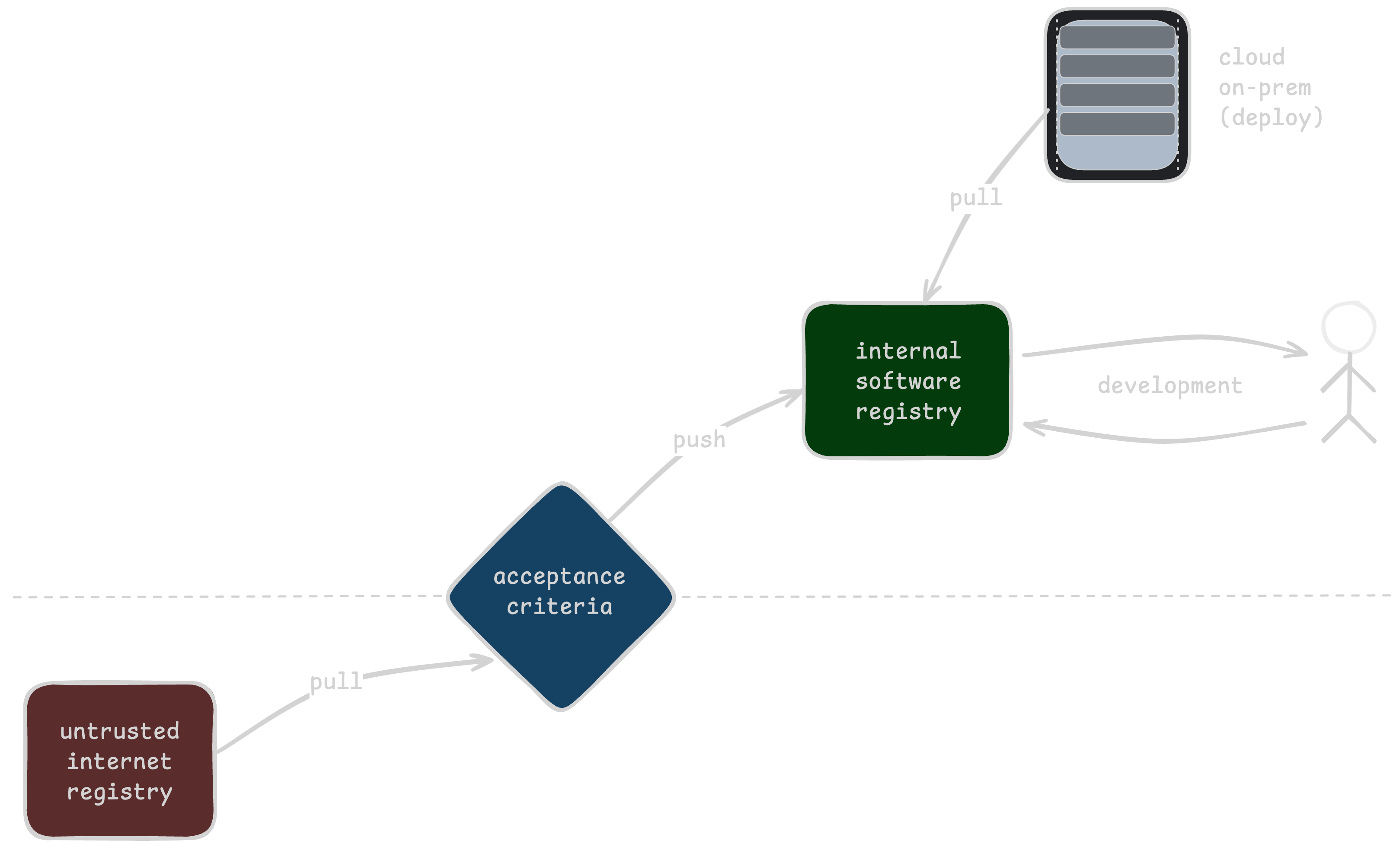

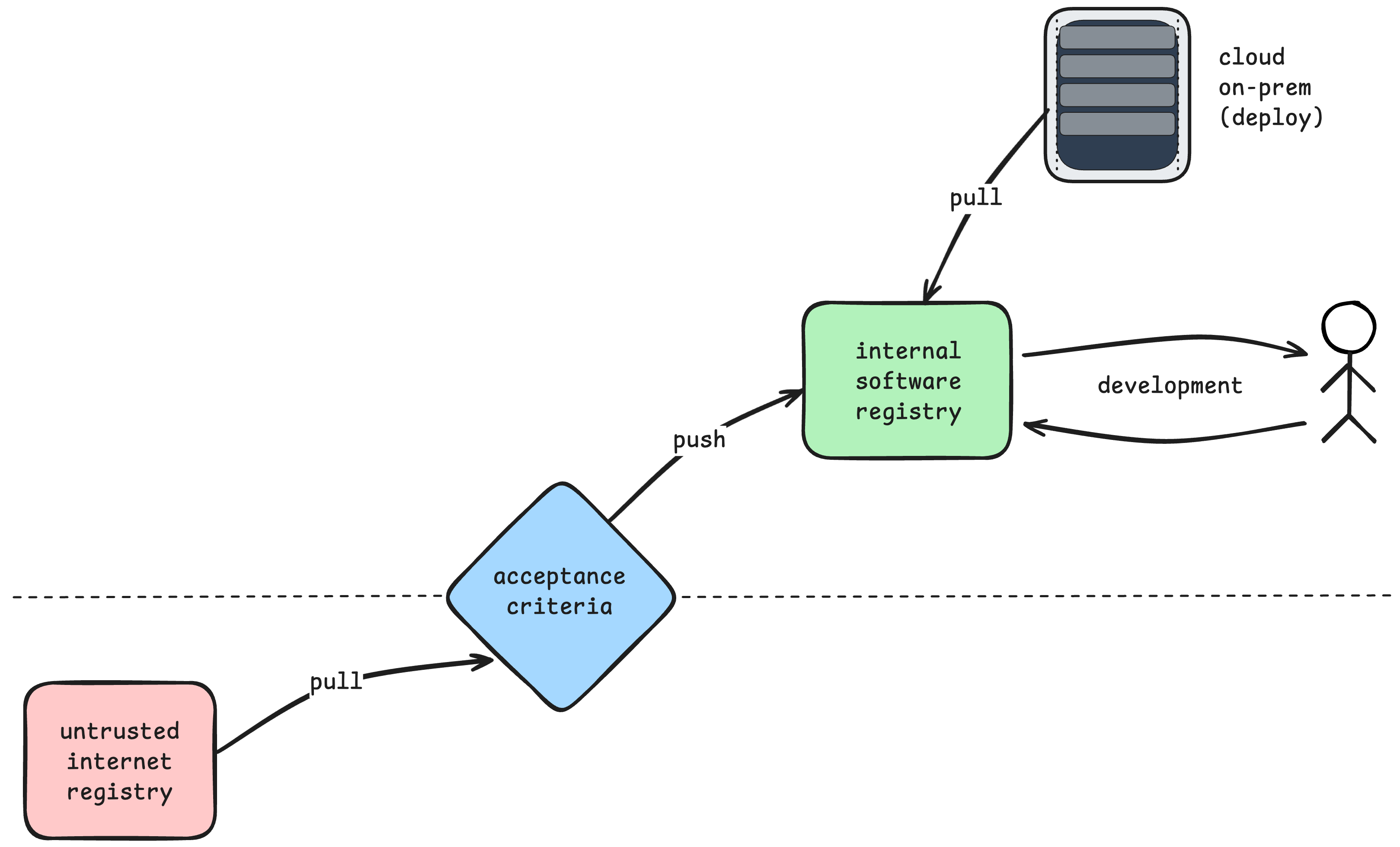

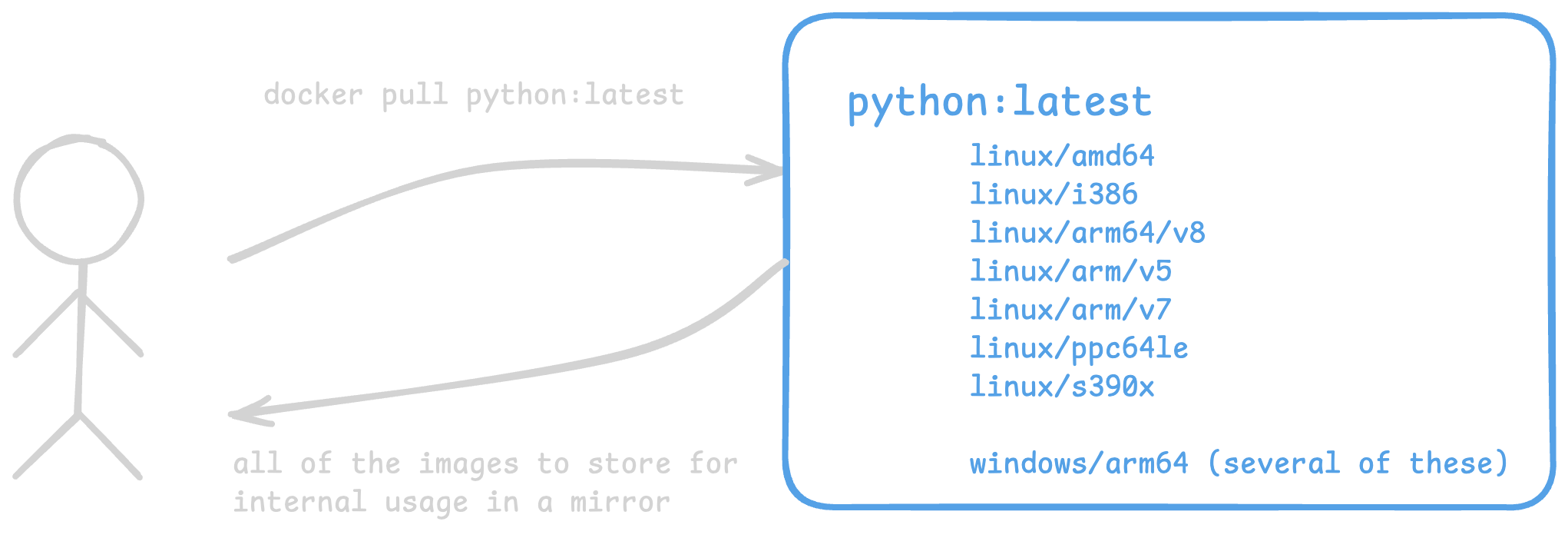

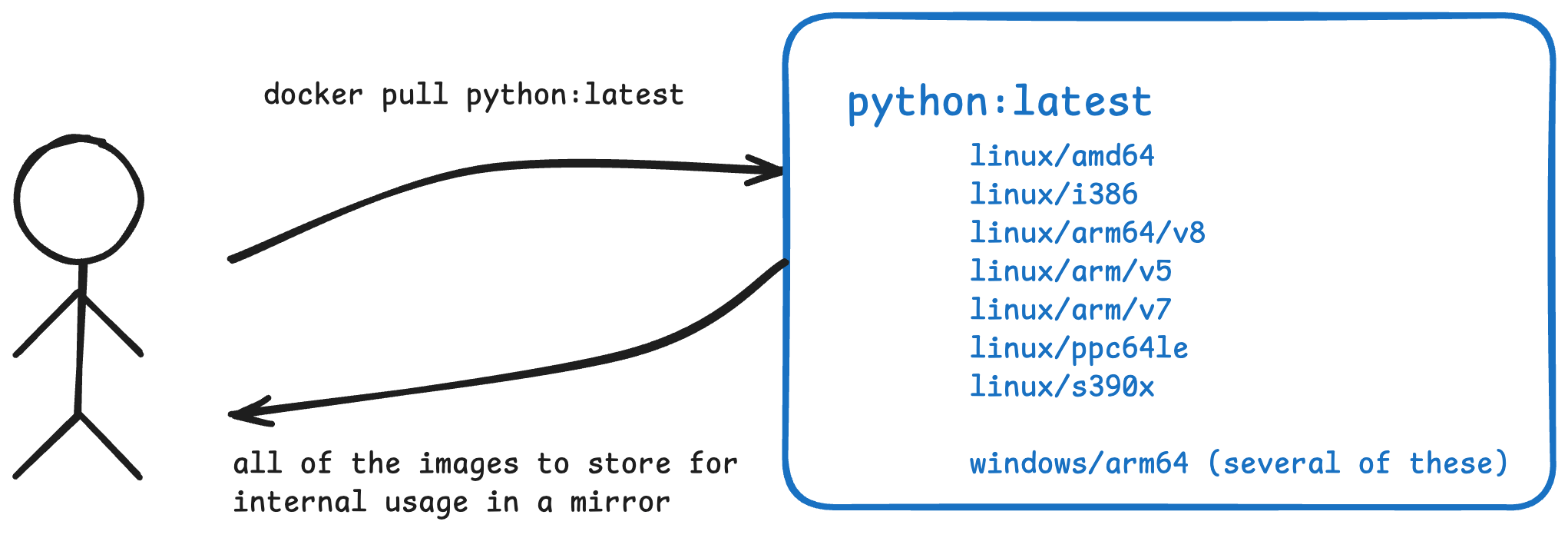

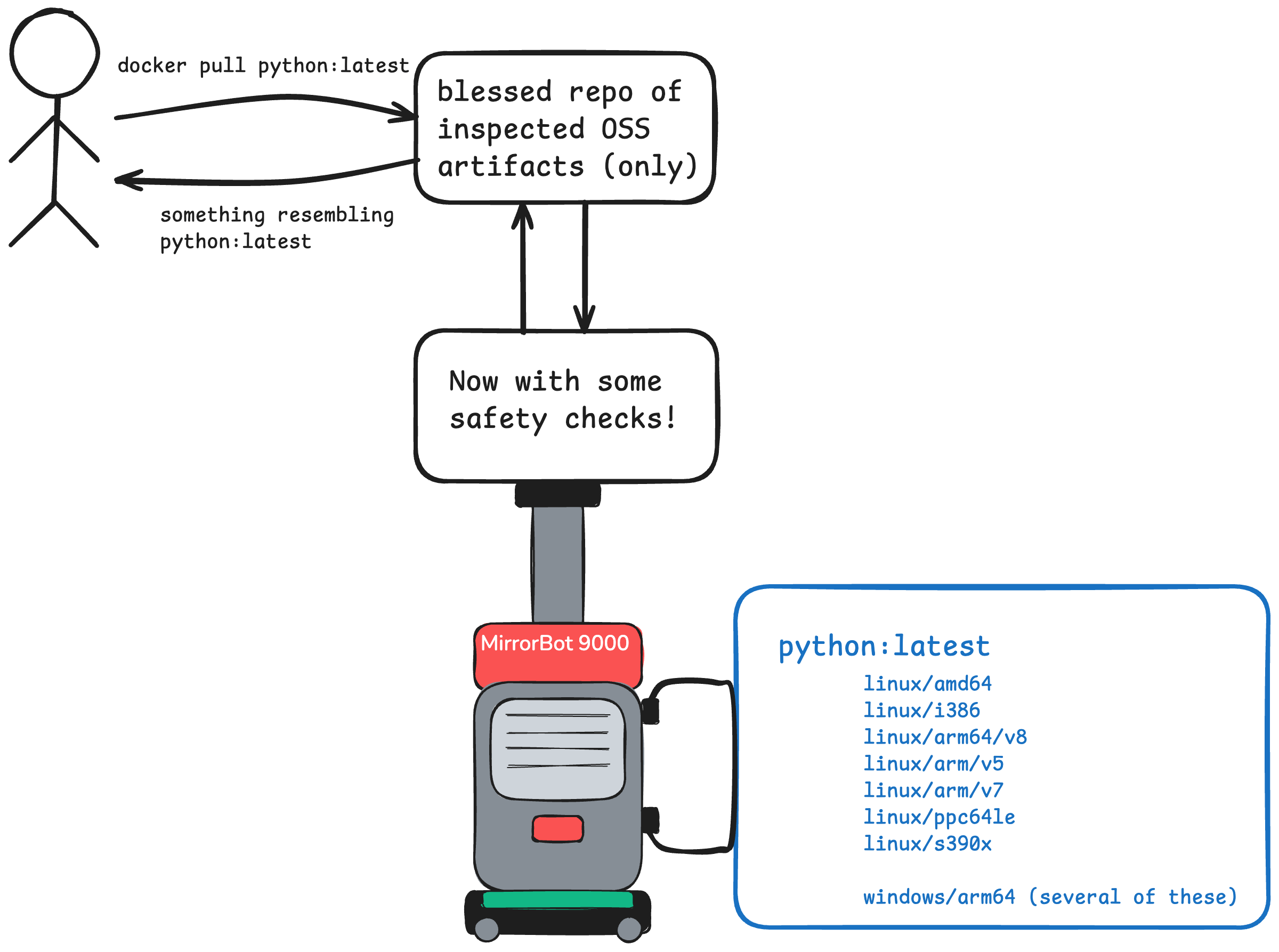

However, once you’re talking to a larger-scale organization, things change. First, they’ll typically want to keep things internal versus having everyone reach out to DockerHub (and get rate limited). To do that, things look more like this:

what most “mirrors” or “internal software factories” want is to pull all/most/more-than-one of these, though

Now security gets involved, and suddenly there are a few more “things” in between developers using the image and the upstream source somewhere on the internet. These things vary based on the company, the sensitivity to risk, the existing tools and vendors there, and probably a lot more. Common checks include:

- scanned (some container scanner)

- scanned again (if one scanner is good, two is better)

- verified to not have been tampered with (this is where Cosign comes in)

- build attestations (not as common, but ties a build to an artifact)

- software bill of materials (SBOM) downloaded (increasingly common)

- sprinkled with ✨ magic compliance pixie dust ✨

- custom SSL certs

- logging

- other configuration

- … and so on …

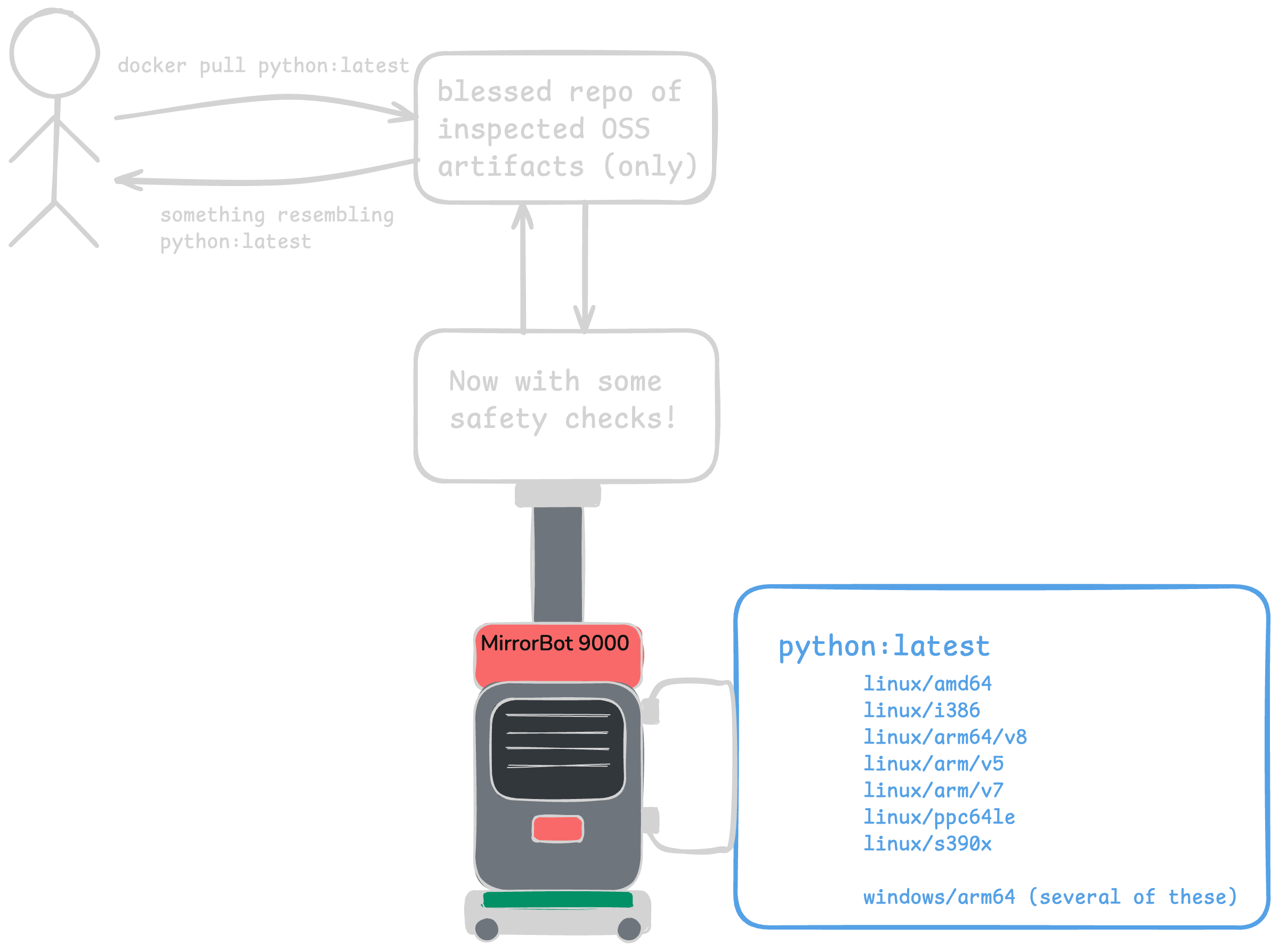

I call this the MirrorBot 9000. It could have been built in-house. Nowadays, it was probably purchased from some vendor who promised it’ll do everything. 🙄

Promises don’t alleviate the burden of responsibility to know how things work.

add in a random assortment of “safety checks” and you end up with something like this

In a single-architecture world

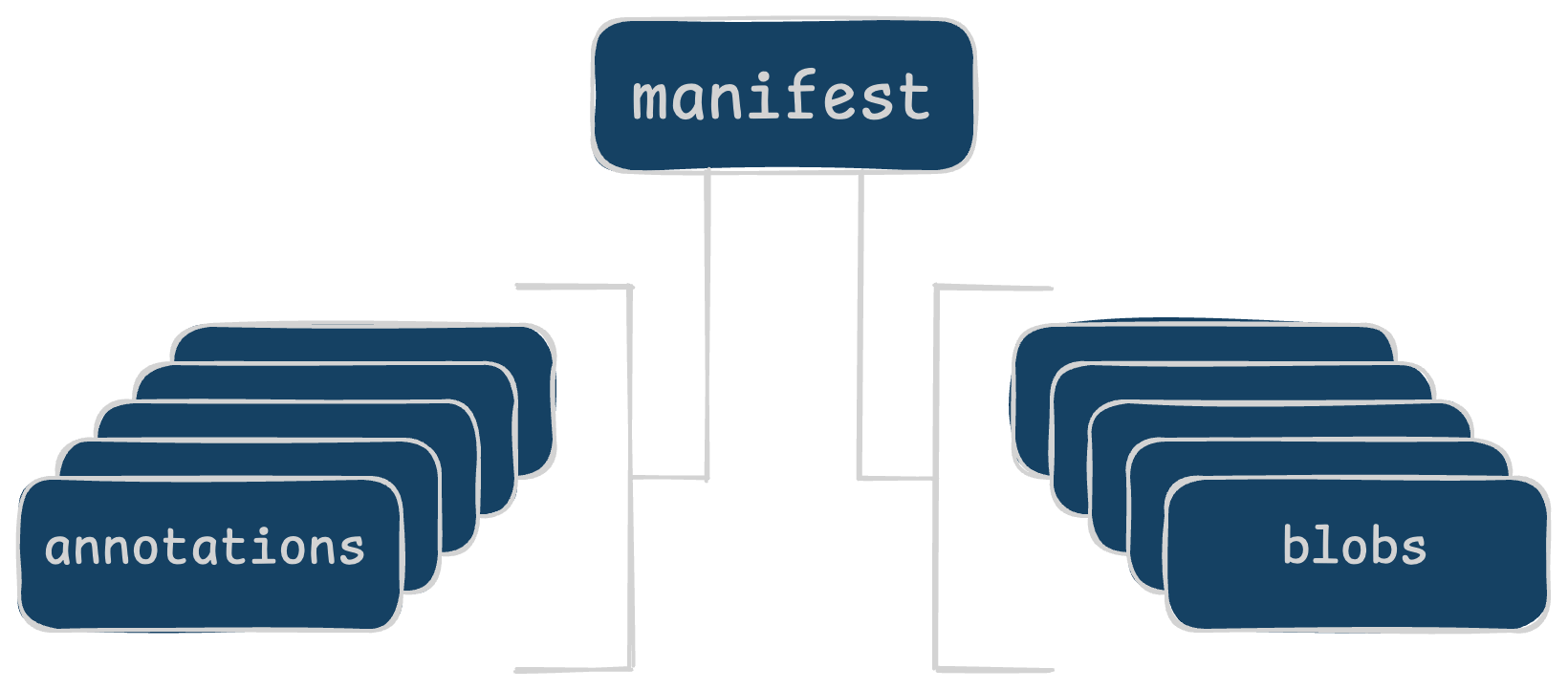

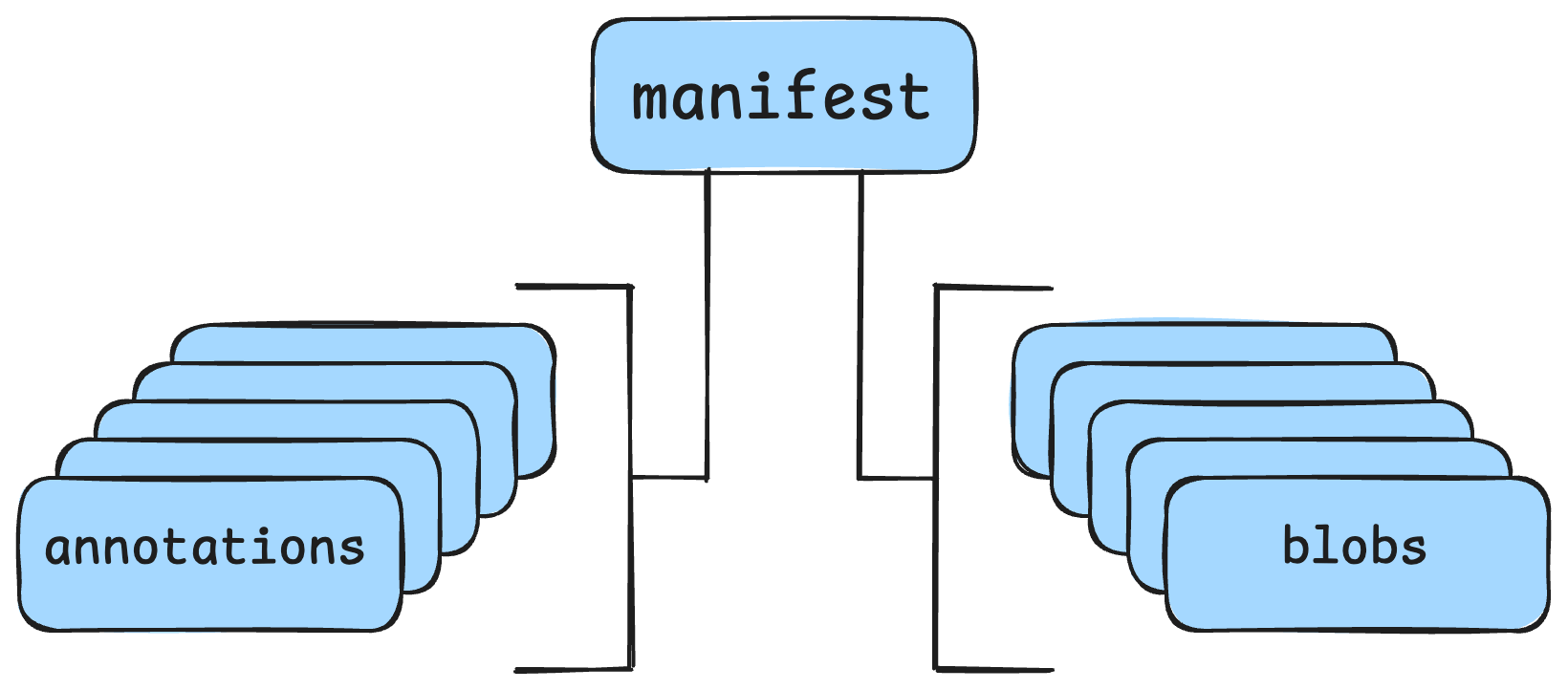

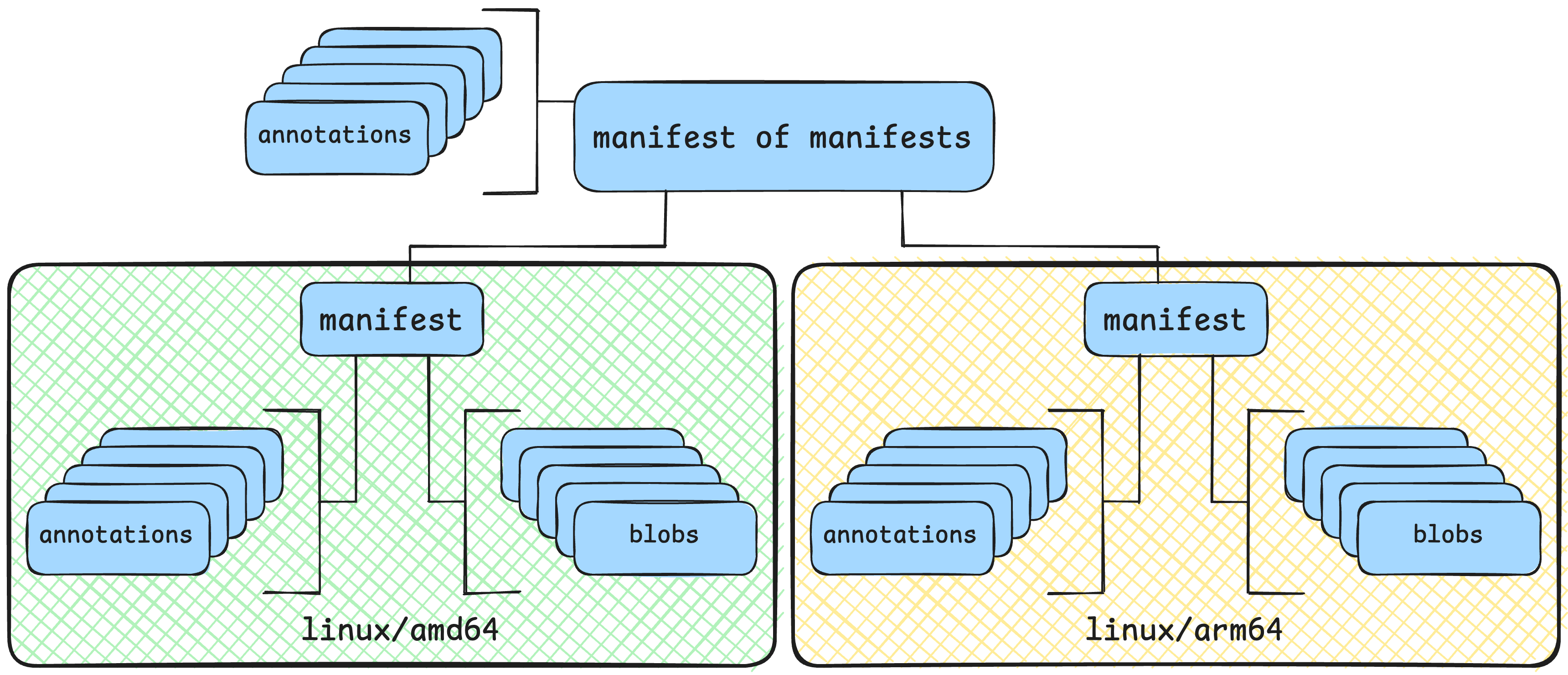

Cosign signs the image manifest. That manifest lists the layers of the image and the configuration and a few other things. The annotations can be many things like build attestations, signature bundles, SBOMs, and even custom data. Most anything you can shove into JSON can be added here.

If any of these things change, the manifest’s SHA256 sum would change and the verification would fail. This failure would alert everyone to something having changed after it was signed. Hooray!

As an architecture diagram, it looks more like this.

life is easy in a single-architecture world

Taking a closer look, here’s what those SHA256 sums look like:

```json
{
  "schemaVersion": 2,
  "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
  "config": {
    "mediaType": "application/vnd.docker.container.image.v1+json",
    "digest": "sha256:d688176b49d54a555e2baf2564f4d3bb589aa34666372bf3d00890a244004d02",
    "size": 1424
  },
  "layers": [
    {
      "mediaType": "application/vnd.docker.image.rootfs.diff.tar.gzip",
      "digest": "sha256:75e162d76c96dd343eab73f30015327ae2c71ece5cad2a40969933a8b3f89dbc",
      "size": 5522209
    },
    {
      "mediaType": "application/vnd.docker.image.rootfs.diff.tar.gzip",
      "digest": "sha256:652eec62968485284a03eec733602e78ae728d5dc0bf6fcc7970e55c0dcb4848",
      "size": 191
    },
    {
      "mediaType": "application/vnd.docker.image.rootfs.diff.tar.gzip",
      "digest": "sha256:ccbf00a6e1a0ff64cfaa5fc857932b4e22c4adee130ef5b64ecf8874650f9aad",
      "size": 136
    }
  ]
}
```

All our home-grown shell scripts or vendored products to do this just worked!
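To make the "any change breaks verification" point concrete, here is a minimal Python sketch of how a manifest digest reacts to tampering. It is an illustration only: a real registry hashes the exact manifest bytes it stores, while this sketch serializes a trimmed-down manifest with `json.dumps` as a stand-in, and the replacement layer digest is made up.

```python
import hashlib
import json


def manifest_digest(manifest_bytes: bytes) -> str:
    """Return a registry-style digest (sha256:<hex>) of the given bytes."""
    return "sha256:" + hashlib.sha256(manifest_bytes).hexdigest()


# Trimmed-down manifest reusing the config and first layer digest from above.
manifest = {
    "schemaVersion": 2,
    "config": {
        "digest": "sha256:d688176b49d54a555e2baf2564f4d3bb589aa34666372bf3d00890a244004d02",
        "size": 1424,
    },
    "layers": [
        {
            "digest": "sha256:75e162d76c96dd343eab73f30015327ae2c71ece5cad2a40969933a8b3f89dbc",
            "size": 5522209,
        }
    ],
}

# Stand-in serialization; a registry would hash the stored bytes as-is.
original = json.dumps(manifest, sort_keys=True).encode()
signed_digest = manifest_digest(original)

# Pretend someone swapped a layer after signing (made-up digest).
manifest["layers"][0]["digest"] = "sha256:" + "0" * 64
tampered = json.dumps(manifest, sort_keys=True).encode()

print(signed_digest)
print(manifest_digest(tampered))
print(manifest_digest(tampered) == signed_digest)  # False
```

The signature is tied to the first digest, so the tampered manifest has nothing valid attached to it.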

More architectures, more problems

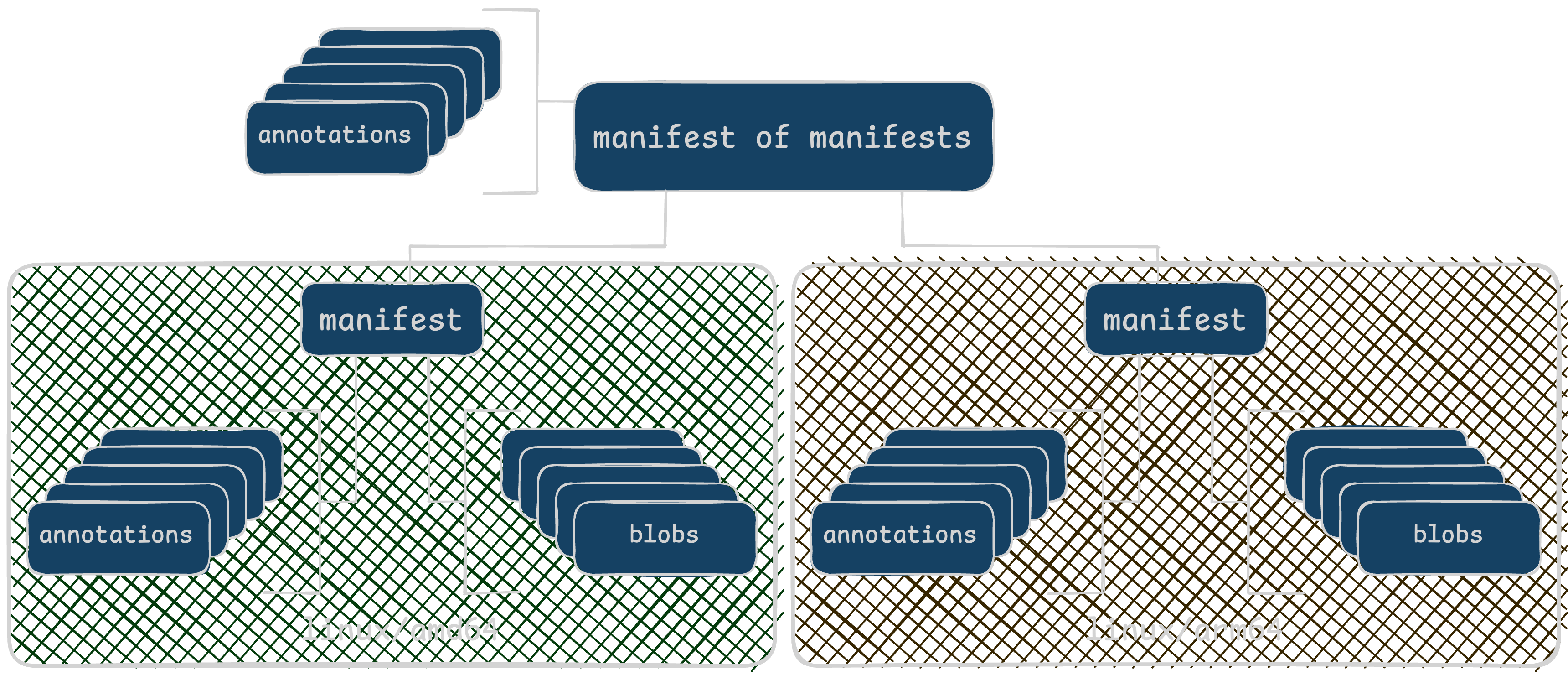

Back to our magic translation between image-name:tag to one of many possible architectures. The python image is a bit scary because it’s published for many different architectures, so let’s use a simpler two-architecture example. The filesystem contents and annotations should vary by CPU type, but they all get referred to as image-name:tag. It looks like this:

adding additional architectures isn’t that difficult though

When you run docker pull image-name:tag, it’ll fetch the “manifest of manifests”. From there, your container tool (docker, skopeo, etc) will download only the blobs you need for the requested architecture. Unless otherwise specified (like with the --platform argument), that will be whatever CPU architecture the tool is running on.

If your MirrorBot 9000 is running on `x86_64`, then it’ll always and only download that unless it’s explicitly told to do otherwise.
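As a rough sketch of that selection step, here is how a tool might pick one entry out of a "manifest of manifests". This is not how any particular runtime implements it. The digests are made-up placeholders, `pick_manifest` is a hypothetical helper, and real tools also consider things like CPU variants, which this ignores.

```python
# Made-up placeholder digests for a two-architecture image index.
AMD64_DIGEST = "sha256:" + "a" * 64
ARM64_DIGEST = "sha256:" + "b" * 64

INDEX = {
    "schemaVersion": 2,
    "mediaType": "application/vnd.oci.image.index.v1+json",
    "manifests": [
        {"digest": AMD64_DIGEST, "platform": {"architecture": "amd64", "os": "linux"}},
        {"digest": ARM64_DIGEST, "platform": {"architecture": "arm64", "os": "linux"}},
    ],
}


def pick_manifest(index: dict, architecture: str, os_name: str = "linux") -> str:
    """Return the digest of the entry matching the requested platform."""
    for entry in index["manifests"]:
        platform = entry.get("platform", {})  # some entries carry no platform
        if platform.get("architecture") == architecture and platform.get("os") == os_name:
            return entry["digest"]
    raise LookupError(f"no manifest for {os_name}/{architecture}")


# A mirror running on x86_64 lands on the amd64 entry and never sees arm64.
print(pick_manifest(INDEX, "amd64") == AMD64_DIGEST)  # True
```

Everything downstream of this step only ever sees the one digest that was picked, which is what sets up the verification surprise later.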

An example

I used this big gnarly query on GitHub to search for examples. We’ll use github/github-mcp-server because it’s on the front page.

Let’s look at the manifest for ghcr.io/github/github-mcp-server:v0.6.0, setting aside the build attestations for now:

```json
{"schemaVersion": 2,
 "mediaType": "application/vnd.oci.image.index.v1+json",
 "manifests": [{
   "digest": "sha256:5d3805606422d8c4984a0bdabbd64aca8ce8c48b440ae32aa3639e59076536f9",
   "platform": {
     "architecture": "amd64",
     "os": "linux"
   }},{
   "digest": "sha256:62684e9098550da194d7155d69af2499c402cc57e2302173a148a4ce348a0021",
   "platform": {
     "architecture": "arm64",
     "os": "linux"
   }},{
   "digest": "sha256:0bd74dcadfb6c0586a6adfbe83382b1aa22e118544142b1b8dc93dac7349bb59",
   "annotations": {
     "vnd.docker.reference.digest": "sha256:5d3805606422d8c4984a0bdabbd64aca8ce8c48b440ae32aa3639e59076536f9",
     "vnd.docker.reference.type": "attestation-manifest"
   }},{
   "digest": "sha256:9461ba550d91bb7abaad1323390fe5a114f042bfd76b2cfe3a273ef932481ae6",
   "annotations": {
     "vnd.docker.reference.digest": "sha256:62684e9098550da194d7155d69af2499c402cc57e2302173a148a4ce348a0021",
     "vnd.docker.reference.type": "attestation-manifest"
   }}]}
```

Using that information, let’s try to verify the image a couple different ways.

```shell
# ✅ verifying the "manifest of manifests" works on the tag
$ cosign verify \
    --certificate-oidc-issuer=https://token.actions.githubusercontent.com \
    --certificate-identity=https://github.com/github/github-mcp-server/.github/workflows/docker-publish.yml@refs/tags/v0.6.0 \
    ghcr.io/github/github-mcp-server:v0.6.0

Verification for ghcr.io/github/github-mcp-server:v0.6.0 --
The following checks were performed on each of these signatures:
  - The cosign claims were validated
  - Existence of the claims in the transparency log was verified offline
  - The code-signing certificate was verified using trusted certificate authority certificates

# ✅ verifying the "manifest of manifests" works on the shasum too
$ cosign verify \
    --certificate-oidc-issuer=https://token.actions.githubusercontent.com \
    --certificate-identity=https://github.com/github/github-mcp-server/.github/workflows/docker-publish.yml@refs/tags/v0.6.0 \
    ghcr.io/github/github-mcp-server@sha256:db17de30c03103dd8b40ba63686a899270a79a1487d2304db0314e5c716118cf

Verification for ghcr.io/github/github-mcp-server:v0.6.0 --
The following checks were performed on each of these signatures:
  - The cosign claims were validated
  - Existence of the claims in the transparency log was verified offline
  - The code-signing certificate was verified using trusted certificate authority certificates

# ❌ but verifying any one of the architectures doesn't
$ cosign verify \
    --certificate-oidc-issuer=https://token.actions.githubusercontent.com \
    --certificate-identity=https://github.com/github/github-mcp-server/.github/workflows/docker-publish.yml@refs/tags/v0.6.0 \
    ghcr.io/github/github-mcp-server@sha256:62684e9098550da194d7155d69af2499c402cc57e2302173a148a4ce348a0021
Error: no signatures found
error during command execution: no signatures found
```

Pop quiz: Where does Cosign sign?

Wherever the upstream project signs!

By default, Cosign signs the highest level manifest - that “manifest of manifests” or “fat manifest”.

There is a way to sign all the “lower” manifests using the --recursive option when Cosign signs the finished artifacts at build. Not every project does this. Many problems with “image ingestion” come from not understanding how the upstream projects are signing their work.

If the MirrorBot 9000 is only pulling in one/some of the architectures built for `image-name:tag`, verification will fail without that top manifest too.
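One possible workaround, sketched here in Python under some assumptions: verify the top-level manifest by digest with Cosign first, then confirm that the single-architecture digest you pulled is actually listed in the index you verified. `platform_is_covered` is a hypothetical helper, and the index bytes below are a hand-built stand-in, so their digest is computed locally rather than being the real `sha256:db17de…` value from the registry.

```python
import hashlib
import json


def platform_is_covered(index_bytes: bytes, verified_index_digest: str, platform_digest: str) -> bool:
    """True only if index_bytes hash to the verified digest and list platform_digest."""
    actual = "sha256:" + hashlib.sha256(index_bytes).hexdigest()
    if actual != verified_index_digest:
        return False  # these are not the bytes whose signature was verified
    index = json.loads(index_bytes)
    return any(entry.get("digest") == platform_digest for entry in index.get("manifests", []))


ARM64 = "sha256:62684e9098550da194d7155d69af2499c402cc57e2302173a148a4ce348a0021"
AMD64 = "sha256:5d3805606422d8c4984a0bdabbd64aca8ce8c48b440ae32aa3639e59076536f9"

# Stand-in for the index bytes a mirror would fetch from the registry.
index_bytes = json.dumps({
    "schemaVersion": 2,
    "manifests": [
        {"digest": AMD64, "platform": {"architecture": "amd64", "os": "linux"}},
        {"digest": ARM64, "platform": {"architecture": "arm64", "os": "linux"}},
    ],
}).encode()

# Stand-in for the digest that `cosign verify` succeeded against.
verified = "sha256:" + hashlib.sha256(index_bytes).hexdigest()

print(platform_is_covered(index_bytes, verified, ARM64))              # True
print(platform_is_covered(index_bytes, verified, "sha256:" + "0" * 64))  # False
```

This works because the signed digest covers the index bytes, and the index bytes pin each per-architecture digest. It is a sketch of the reasoning, not a replacement for asking upstream how they sign.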

It isn’t only a GitHub Actions problem. Since that’s currently the most common system using Sigstore, it’s easier to find examples. Literally any OIDC provider can follow the same workflow and run into the same problem. The same problem also exists if you’re using long-lived keys to sign with Cosign instead.

You need to know the identity of who/what signed the artifact in order to verify it was signed by who you thought. There’s a handy flag in Cosign called `--certificate-identity-regexp` that’ll allow regular expressions for identity, but be sure to validate that the regex is matching what you think it is.
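Here is a small Python sketch of why that validation matters. Treat it as an approximation: Cosign uses Go's regular expression engine, not Python's `re`, and this assumes unanchored "search" matching, which is worth confirming against your Cosign version. The lookalike repository name is made up.

```python
import re

SIGNER = "https://github.com/github/github-mcp-server/.github/workflows/docker-publish.yml@refs/tags/v0.6.0"
# Hypothetical lookalike repository that should NOT be trusted.
LOOKALIKE = "https://github.com/github/github-mcp-server-evil/.github/workflows/docker-publish.yml@refs/tags/v0.6.0"

# Loose: no anchors, unescaped dots, matches anywhere in the string.
LOOSE = r"github.com/github/github-mcp-server"
# Strict: anchored at both ends, dots escaped, only the tag version varies.
STRICT = (
    r"^https://github\.com/github/github-mcp-server/"
    r"\.github/workflows/docker-publish\.yml@refs/tags/v\d+\.\d+\.\d+$"
)

for name, pattern in (("loose", LOOSE), ("strict", STRICT)):
    print(name, bool(re.search(pattern, SIGNER)), bool(re.search(pattern, LOOKALIKE)))
# loose True True
# strict True False
```

The loose pattern happily accepts the lookalike. Anchoring with `^` and `$` and escaping the dots is what keeps it out.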

Building multi-arch better

There’s a ton of tools to choose from to build a container image. The good news is this choice doesn’t matter: Cosign works regardless of what built the artifact it’s signing. What does matter is where the signing happens. Since most releases (and most signing) take place in automated continuous integration systems, that’s where we need to sign all the things.

Here’s a quick shell example:

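```shell
# TAGS is a whitespace-separated list of image tags, DIGEST is the digest of the pushed image
images=""
for tag in ${TAGS}; do
  # pin every tag to the digest so the signature covers exactly what was built
  images+="${tag}@${DIGEST} "
done
# left unquoted on purpose so each reference is passed as its own argument
cosign sign --yes ${images}
```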

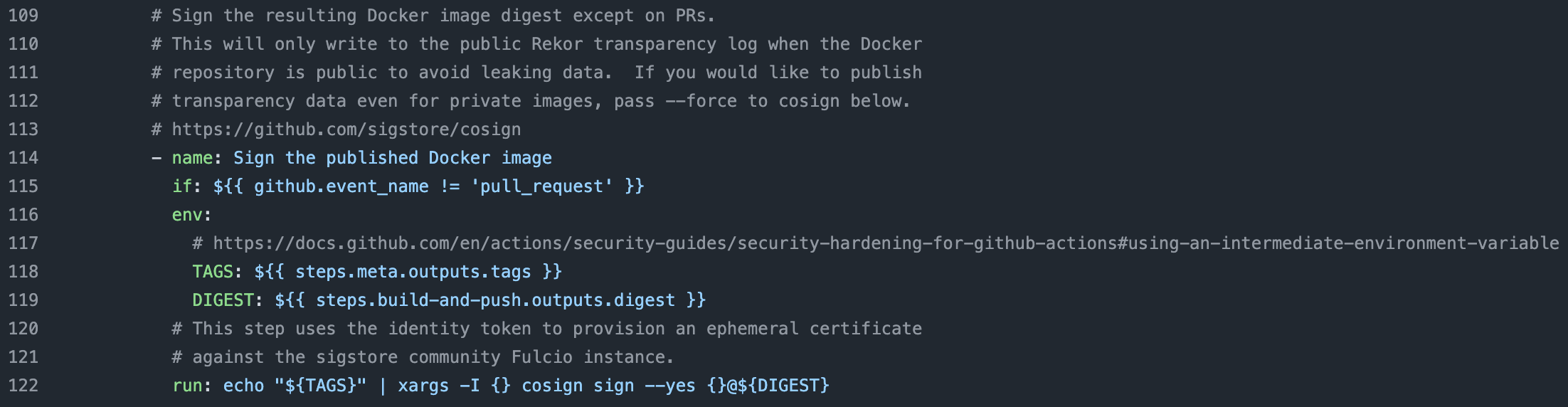

GitHub Actions

Most folks aren’t using Bash in builds directly anymore … instead we pipe that Bash script into YAML. 😅

Using our example from above, here’s that image built in GitHub Actions. It’s signing that top-level manifest and that’s it. This is perfectly acceptable!

repository in GitHub

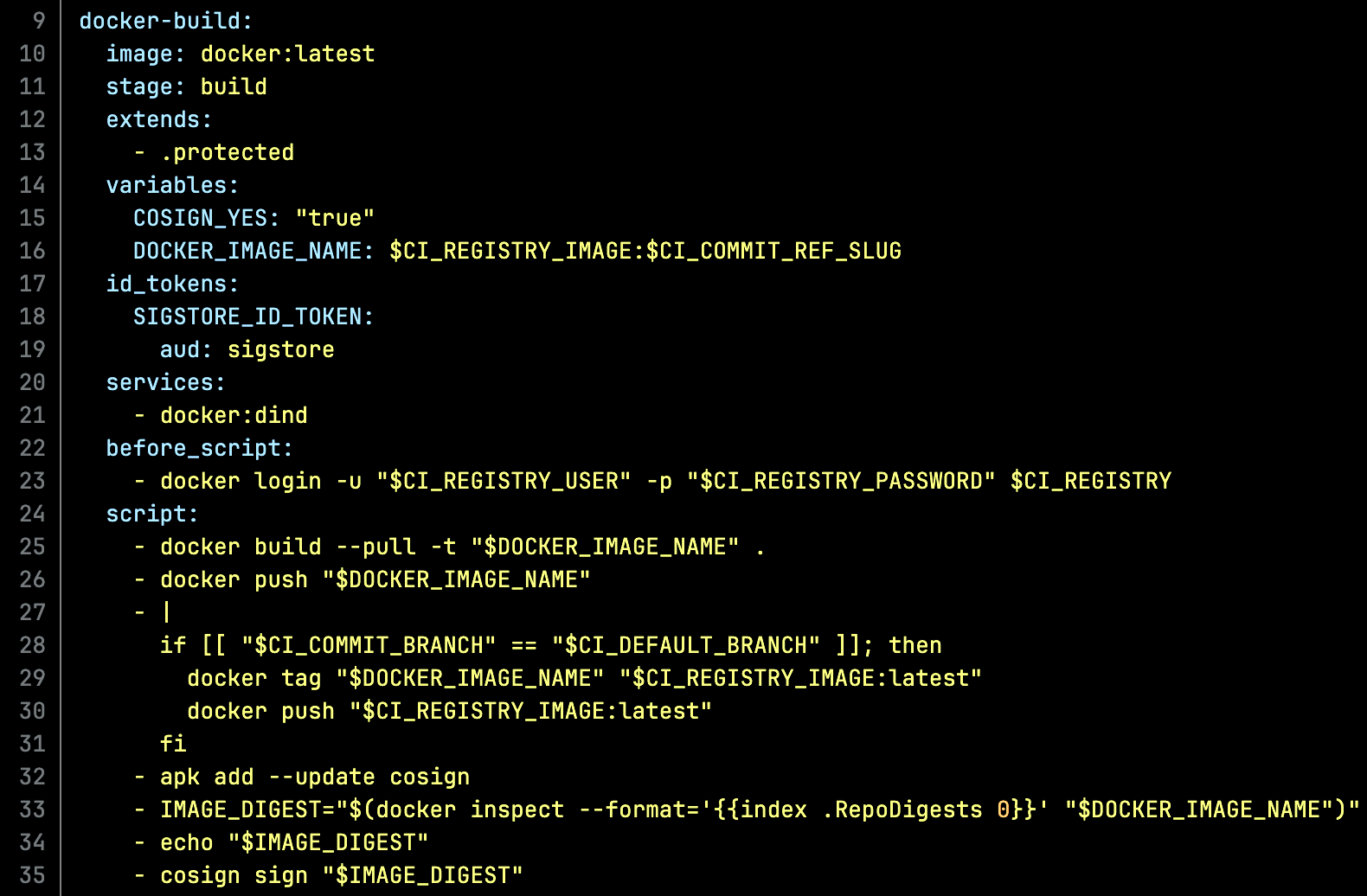

GitLab CI

While GitHub is great and by far the most popular on the open internet, I work mostly with public sector folks. For the most part, the ratio flips to GitLab when we’re self-hosted off the internet. Fortunately it’s just as easy because it too is YAML templating around shell scripts.

repository in GitLab

How to bring in verified containers

Our first “container image ingest systems” to bring in open source software from the internet ended up looking a lot like this (in pseudo code).

- `docker pull thing:tag`

- scan it with `things`

- verify it with `curl url-of-sha-file | sha256sum file-name`

- … install some things, add some ssl certs, whatever else …

- retag the image and push to internal registry

It was more than likely written in Bash on a cron job, or run through something like Rundeck. The first commercial “MirrorBot 9000” iterations looked a lot like this too.

This started to break with the adoption of multi-architecture images - partly due to verification, but mostly because we didn’t need every build target. So we changed our script around into something more like this:

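```shell
# verify the top-level (multi-arch) manifest first, adding --key or the
# --certificate-identity / --certificate-oidc-issuer flags for your upstream
cosign verify thing:tag
# LIST_OF_PLATFORMS is whitespace-separated, e.g. "linux/amd64 linux/arm64"
for PLATFORM in ${LIST_OF_PLATFORMS}; do
  docker pull --platform="${PLATFORM}" thing:tag
  # scan with scanner
  # other things here
  # retag and push to internal
done
```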

Optionally, create a new multi-architecture manifest for the internal registry. Some tools have a manifest create command to do much of this for you.
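As a sketch of that optional step, this Python helper builds (but does not run) the `docker manifest create` and `docker manifest push` commands for a set of per-architecture images. The registry name and the `tag-arch` naming scheme are assumptions for illustration, and `docker manifest create` expects the per-architecture images to already be pushed to the registry.

```python
import shlex


def manifest_commands(target: str, platforms: list) -> list:
    """Return argv lists that stitch per-arch images into one multi-arch tag."""
    # "linux/amd64" -> "amd64"; CPU variants such as "linux/arm/v7" are not handled.
    per_arch = [f"{target}-{platform.split('/')[1]}" for platform in platforms]
    return [
        ["docker", "manifest", "create", target, *per_arch],
        ["docker", "manifest", "push", target],
    ]


# Hypothetical internal registry and image name.
for cmd in manifest_commands("registry.internal.example/thing:tag", ["linux/amd64", "linux/arm64"]):
    print(shlex.join(cmd))
```

Printing the commands instead of executing them keeps the sketch safe to run anywhere. Swapping `print` for `subprocess.run(cmd, check=True)` would be the obvious next step once the names match your environment.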

Early “internal mirrors” didn’t support multi-architecture images out of the box, leading to some tech debt and difficult-to-change patterns. The most common one is breaking a single image into multiple ones - for example, python:latest becomes python:latest-amd64 and python:latest-arm64. It can be hard to manage drift between versions, but it is at least explicit. Once it’s in place, it could also be more effort than it’s worth to change.

Offline verification is probably not what you wanted

Offline verification seems like ✨ magic ✨ (no, it’s OIDC). However, it’s probably not going to answer the questions folks ask about. The “retag” step and other compliance-driven additions (labels, configuration like SSL certificates, or other changes) mean the internal compliance process changes the image, and offline verification of the upstream signature no longer works on the result. In this case, verify the original, then re-sign it once it’s been changed if needed.
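The ordering is the important part, so here is a minimal Python sketch of it: verify the untouched upstream image, do the internal changes, then sign the result with your own identity. It only builds the commands. The internal registry, the new digest, and the `cosign.key` path are hypothetical, and keyless signing would work just as well as the long-lived key assumed here.

```python
import shlex


def ingest_commands(upstream: str, issuer: str, identity: str, internal: str, key: str) -> list:
    """Return argv lists: verify upstream first, re-sign the modified image last."""
    verify = [
        "cosign", "verify",
        f"--certificate-oidc-issuer={issuer}",
        f"--certificate-identity={identity}",
        upstream,
    ]
    # Runs only after the compliance changes have produced a new internal digest.
    resign = ["cosign", "sign", "--yes", f"--key={key}", internal]
    return [verify, resign]


commands = ingest_commands(
    upstream="ghcr.io/github/github-mcp-server:v0.6.0",
    issuer="https://token.actions.githubusercontent.com",
    identity="https://github.com/github/github-mcp-server/.github/workflows/docker-publish.yml@refs/tags/v0.6.0",
    internal="registry.internal.example/github-mcp-server@sha256:" + "0" * 64,  # made-up digest
    key="cosign.key",  # hypothetical key path
)
for cmd in commands:
    print(shlex.join(cmd))
```

Internal consumers then verify against the internal signature, and the record of the upstream verification lives in the ingest pipeline's logs.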

In the real world

Some terrible things found in the field on those “magic black boxes” include

- not knowing which upstream is getting pulled from (eg, Quay vs DockerHub, confusing GCR and GHCR is common too)

- the upstream source can be silently set by endpoint teams, overwritten by various programs, differ by docker context, etc.

- disabling SSL verification

- shell flags that do nothing

- pinning to ancient digests and never updating them, so `latest` is from years ago

Ingesting software from the internet regularly at any appreciable scale means knowing

- what software the company is using

- and all of its dependencies

- how that software is built

- where it’s being signed

- verifying it in the “MirrorBot 9000” before bringing it in

Turns out software supply chain security is a lot of inventory. Inventory is hard work - even when the images aren’t magic!

tl;dr - how to be better

Adjust your company processes according to the upstream if needed. Some best practices I’ve seen work well for larger companies are the same as the best practices for everyone else.

- Sign both tags (mutable) and digests (immutable), using `--recursive` for multi-architecture images if possible

- Sign at build time as part of the same workflow / CI run

- Use OIDC if you can because it’s literally free sparkles (can’t leak keys you don’t have)

- Don’t trust “magic black boxes” - look inside to sleep soundly at night.

However, if you’re the upstream, I don’t have much to ask for. Upstream doesn’t owe the folks using that software anything. If you’re feeling generous though, including the copy/paste commands for Cosign to verify for anything you’re signing is pretty helpful for everyone - other maintainers, users, and contributors. Sigstore is great, and it’s easier than a ton of other ways we’ve had to sign and verify artifacts … but it’s still new to so many folks. 💖