Writing tests for your Actions runners

Last time, we built a pipeline to test our custom CI container images on each proposed change. It built and launched a runner, dumped some debug information to the console as a “test”, then removed itself. This is valuable, but definitely not robust enough to save serious engineering hours.

```mermaid
flowchart LR
    A(Build<br>`image:test`) --> B(Push to registry)
    B --> C(Deploy to<br>test namespace)
    subgraph Does it do the things we need?
    C --> D(Run tests!)
    end
    D --> E(Delete the deployment)
```

To do that, let’s spend more time in the highlighted box of the above diagram. We’ll write comprehensive tests for our runners, using GitHub Actions to test itself.

Many of our basic infrastructure availability tests can be run as composite Actions, which makes them reusable across different custom images. Mostly, they wrap simple shell scripts that verify something does or does not work as expected, using the most basic utilities wherever possible. These are all stored in the ~/tests directory of our runner image repository, then called by the workflows that build and test changes to the runners on each pull request.

Test #0

The first test isn’t explicitly a test. It’s the prior PR checks that validate that the image can:

- be built
- be deployed via a Helm chart
- initialize
- connect to GitHub (cloud or server) to receive tasks to run

I’ve never been a fan of implicit testing, especially tests that have multiple steps, but there isn’t a succinct or tidy way to avoid it in this case. The build/deploy/test workflow is structured to isolate failures in each of those discrete steps.

How to write a test

GitHub Actions, like most infrastructure task dispatch and automation tools, uses shell exit codes [1] to determine success or failure. Any non-zero exit status from a command is interpreted as a failure and reported back to the orchestrator, failing our Actions job.

Here’s an example of using that principle to verify that Python 3.10 is available for our jobs to use:

```yaml
name: "Python 3.10 check"
description: "Ensure Python 3.10 is available"

runs:
  using: "composite"
  steps:
    - name: "Python 3.10 is available"
      shell: bash
      run: |
        python3 -V | grep "3.10"
```

It’s printing out the Python version, piping that output into the grep command, which then looks to see if the string 3.10 is there. If it is, the exit code will be 0 and GitHub Actions will be a happy camper. If not, the step fails and we should probably investigate.
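The same exit-code idea can be wrapped in a small reusable function. This is only a sketch: `output_contains` is a hypothetical helper name, not something from the runner image repository. It uses `grep -F` so the dot in `3.10` is matched literally rather than as a regular-expression wildcard.

```shell
# Hypothetical helper: succeeds (exit 0) only if the command's output
# contains the expected text, and fails (non-zero) otherwise.
output_contains() {
  local expected="$1"
  shift
  # Run the remaining arguments as a command and search its output.
  # -F matches a fixed string, so "3.10" will not match "3110".
  # The function returns grep's exit status.
  "$@" 2>&1 | grep -F -- "$expected" > /dev/null
}

# Example usage inside a test step (commented out so the sketch has no side effects):
# output_contains "3.10" python3 -V
```

Because the function's exit status is just the exit status of `grep`, it can be the last line of a `run:` block and GitHub Actions will pass or fail the step accordingly.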

This gets tricky when you try to prove a negative. It’s not wise to give sudo privileges without a really good reason, but passing a test on “failing as expected” isn’t intuitive either. Here’s an example using exit codes to verify that sudo must fail:

```yaml
name: "Sudo fails"
description: "Make sure `sudo` fails"

runs:
  using: "composite"
  steps:
    - name: "Sudo fails"
      shell: bash
      run: |
        # Check sudo's exit status directly; -n stops it from waiting on a password prompt
        if sudo -n echo "test" > /dev/null 2>&1; then
          echo "sudo should fail, but didn't"
          exit 1
        else
          echo "sudo failed as expected"
          exit 0
        fi
```

In practice, this test also verifies that the UID [2] and EUID of the agent are non-privileged. It does no good to remove the sudo executable if you’re already running as root. 😊

Since failure is the desired outcome, the test is wrapped in a simple if/else/fi conditional that swaps 0 in for any non-zero (desired) value. Using echo statements to be explicit about the conditions needed to pass or fail should improve long-term maintainability, as does keeping the test script scoped to a single task.
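The "pass when it fails" pattern generalizes to any command. Here is a minimal sketch, assuming two hypothetical helpers (`expect_failure` and `assert_not_root` are illustrative names, not part of the existing tests): the first inverts a command's exit status, and the second checks that neither the real nor the effective user ID is 0.

```shell
# Hypothetical helper: passes only when the wrapped command fails.
expect_failure() {
  if "$@" > /dev/null 2>&1; then
    echo "expected failure, but succeeded: $*"
    return 1
  fi
  echo "failed as expected: $*"
  return 0
}

# Hypothetical helper: passes only when both IDs are non-zero.
# Arguments: real user ID, effective user ID.
assert_not_root() {
  local uid="$1" euid="$2"
  if [ "$uid" -eq 0 ] || [ "$euid" -eq 0 ]; then
    echo "running as root (uid=${uid}, euid=${euid})"
    return 1
  fi
  echo "not root (uid=${uid}, euid=${euid})"
}

# Example usage inside a test step (commented out so the sketch has no side effects):
# expect_failure sudo -n true
# assert_not_root "$(id -u -r)" "$(id -u)"
```

Passing the IDs in as arguments keeps the check easy to exercise with known values, while `id -u -r` and `id -u` supply the real and effective IDs at run time.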

Using a test in a workflow file

Once we have the test written, using it as a PR check is simple. For the testing step in the workflow we built out last time, add the following (for each test you want to run):

```yaml
- name: Checkout # needed to access tests within the repo
  uses: actions/checkout@v4

- name: Print debug info
  uses: ./tests/debug

- name: Sudo fails
  uses: ./tests/sudo-fails

- name: More tests go here
  uses: ./tests/yet-another-test
```

This keeps our tests alongside the rest of the files that generate the images, for easy collaboration within the project. GitHub Actions don’t need to be part of the marketplace in order to be helpful. Since these are more than likely internal and not public, they’re not listed on the marketplace in their own individual repositories. They can be copied into any project that wants to use them.

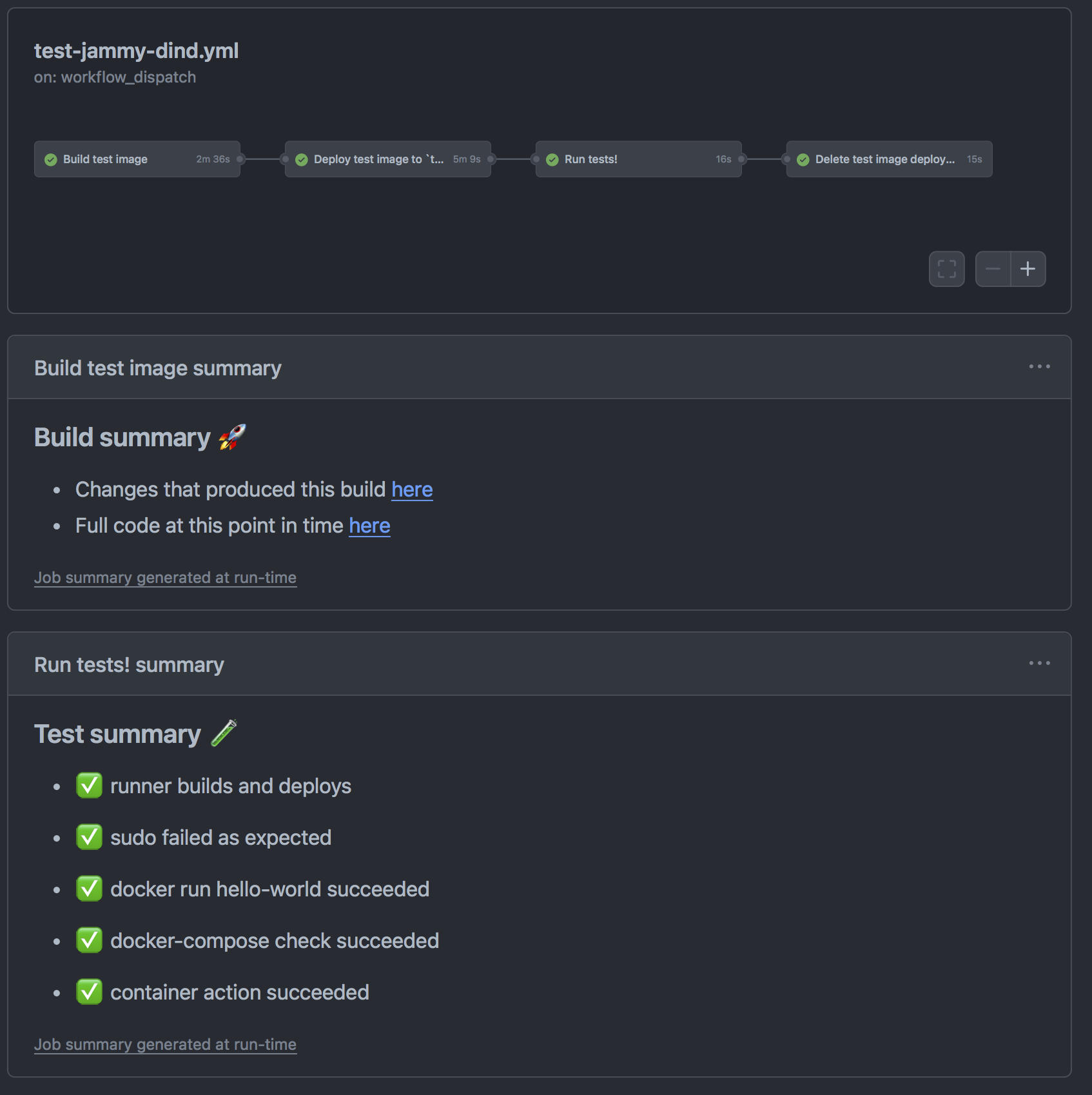

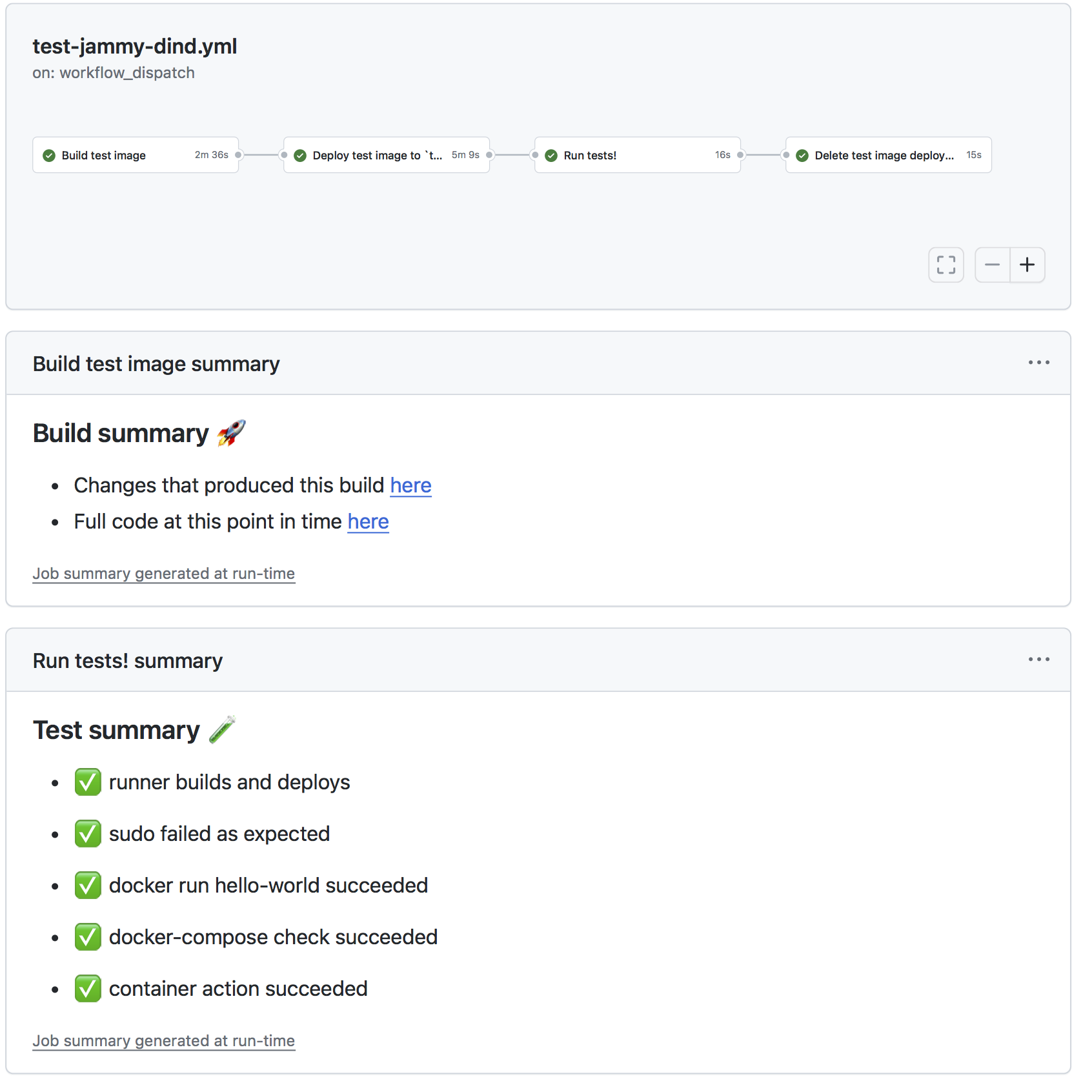

Human-friendly summary of tests

Lastly, a friendly “at-a-glance” view of all the tests performed becomes increasingly important as the number of tests increases. Isn’t it beautifully simple to see whether all is well or which test needs attention?

To do it, we can set step summaries to produce that pretty output from within the tests for each runner. The header is set in the first lines of ~/.github/workflows/test-RUNNERNAME.yml like so:

```yaml
- name: Setup test summary
  run: |
    echo '### Test summary 🧪' >> "$GITHUB_STEP_SUMMARY"
    echo ' ' >> "$GITHUB_STEP_SUMMARY"
    echo '- ✅ runner builds and deploys' >> "$GITHUB_STEP_SUMMARY"
```

From here, edit the script running each test to also output a bullet point summary in Markdown, as shown here in ~/tests/docker/action.yml:

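```yaml
- name: "Docker test"
  shell: bash
  run: |
    # Test the command directly: bash runs with -e here, so a failing
    # command would end the step before a separate `$?` check could run
    if docker run hello-world; then
      echo "- ✅ docker run hello-world succeeded" >> "$GITHUB_STEP_SUMMARY"
    else
      echo "- ❌ docker run hello-world failed" >> "$GITHUB_STEP_SUMMARY"
      exit 1  # still fail the step after recording the result
    fi
```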
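If many tests repeat this run-then-report shape, it could be factored into one function. The following is a sketch under assumptions: `run_and_report` is a hypothetical name, and the fallback to standard output when `GITHUB_STEP_SUMMARY` is unset is a convenience for running it outside of Actions.

```shell
# Hypothetical helper: runs a command, appends a Markdown bullet with the
# result to the step summary, and preserves the command's exit status.
run_and_report() {
  local label="$1"
  shift
  # Outside of GitHub Actions the variable is unset, so print instead.
  local summary="${GITHUB_STEP_SUMMARY:-/dev/stdout}"
  if "$@"; then
    echo "- ✅ ${label} succeeded" >> "$summary"
  else
    local status=$?
    echo "- ❌ ${label} failed" >> "$summary"
    return "$status"
  fi
}

# Example usage inside a test step (commented out so the sketch has no side effects):
# run_and_report "docker run hello-world" docker run hello-world
```

Returning the original exit status means the summary line is written first and the step still fails when the command does.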

All about the existing tests

There are a handful of tests already written and in use in the kubernoodles/tests directory for the images built in that repository. Here’s a quick summary of what they do, if you’d like to copy them into your own project.

Debug info dump

This test dumps commonly needed debugging info to the logs, including:

- printenv for all available environment variables
- $PATH to show the loaded paths for the executables called
- Information about the user running the agent from whoami, returning the UID, groups, and their GIDs

This step outputs information without checking any values. Pipe the output to grep to look for something if it’s important to do here.

Sudo status

Two tests cover sudo. The first verifies that sudo fails, that the process isn’t running as user ID 0 (as, or effectively as, root), and that the user isn’t a member of commonly privileged groups.

The second verifies that sudo works.

💡 Since these two test opposite things, pick the one applicable to your use case.

Docker

This test prints some information to the console logs about the state of docker and the bridge network that it uses (so as to check MTU, among other things). It will then pull and run the hello-world image. It also checks that Compose is available.

Podman

This test is the same general container test as the Docker tests, but using the Red Hat container tooling instead. It checks the following:

- common debug information is printed to the console
- podman can pull and run the hello-world container
- docker aliases work, so these can be a reasonably drop-in replacement
- buildah is installed and available
- skopeo is installed and available
- podman compose is installed and available

Container Actions work

This test builds and runs a very simple Docker Action. It prints a success message to the console before exiting. Run this to verify that this class of Actions works as expected.

Arbitrary software

This pattern extends well to any other arbitrary software you want installed, building slightly on the example of Python we opened with. Follow the same pattern of using exit codes to either find the executable with which or call it with the version flag for that program, pipe to grep if you’re checking for specific versions or other info, etc.

Finishing that version check for Python 3.10 would look like this:

```yaml
name: "Python 3.10 check"
description: "Ensure Python 3.10 is available"

runs:
  using: "composite"
  steps:
    - name: "Python 3.10 is available"
      shell: bash
      run: |
        # Test the pipeline directly so the summary is written before the step exits
        if python3 -V | grep "3.10"; then
          echo "- ✅ Python 3.10 works!" >> "$GITHUB_STEP_SUMMARY"
        else
          echo "- ❌ Python 3.10 not available" >> "$GITHUB_STEP_SUMMARY"
          exit 1  # fail the step after recording the result
        fi
```
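For images that only need to confirm a list of executables is present, the same pattern can loop over tool names. This is a sketch, not one of the existing tests: `require_tools` is a hypothetical name, and it uses the shell builtin `command -v` in place of `which` because it is available even on very minimal images.

```shell
# Hypothetical helper: checks that every named executable is on the PATH.
# Prints one Markdown bullet per tool and fails if any are missing.
require_tools() {
  local missing=0
  local tool
  for tool in "$@"; do
    if command -v "$tool" > /dev/null 2>&1; then
      echo "- ✅ ${tool} found at $(command -v "$tool")"
    else
      echo "- ❌ ${tool} not found on PATH"
      missing=1
    fi
  done
  # Non-zero if at least one tool was missing, so the step fails.
  return "$missing"
}

# Example usage inside a test step (commented out so the sketch has no side effects):
# require_tools git curl jq >> "$GITHUB_STEP_SUMMARY"
```

Checking every tool before returning, rather than stopping at the first miss, means one run reports everything that needs fixing.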

Conclusions

As we’ve seen so far, this pattern of using containers in Kubernetes similarly to virtual machines leads to large images. Balancing these large images means having more images to maintain. Comprehensive test coverage of what each image needs in order to function makes this maintenance easier and is another stepping stone to automating more of this infrastructure. What better system to use than GitHub Actions itself to have your runner images test themselves?

Next time

🔐 Managing the attack surface of our runners by removing unnecessary software and services, in “Reducing CVEs in actions-runner-controller”

Footnotes

1. The Linux Documentation Project book on Advanced Bash Scripting has a ton of information about exit status codes and a breakdown of the ones with special meanings.

2. More about user IDs (uid), group IDs (gid), and effective user IDs (euid) on Wikipedia and Red Hat’s blog. Long story short: if any of these values are 0, it’s running as root or has root permissions.