Images have their own risks, too

You’ve locked down your runtime, orchestrator, and hosts. Now how about what’s actually running inside of those containers?

Container images are too often treated as black boxes until something goes wrong. Different scanning tools can give wildly different results, vulnerabilities hide in plain sight, and that “secure” base image might do no good if you’re configuring it poorly.

Welcome to the last part of our gentle intro to container security, where some risks are trivial to fix and others are baked into your entire application’s logic.

This is the last part of a series put together from client-facing conversations, conference talks, workshops, and more over the past 10 years of asking folks to stop doing silly things in containers. Here’s the full series, assembled over Autumn 2025. 🍂

Images have vulnerabilities, just like virtual machines do

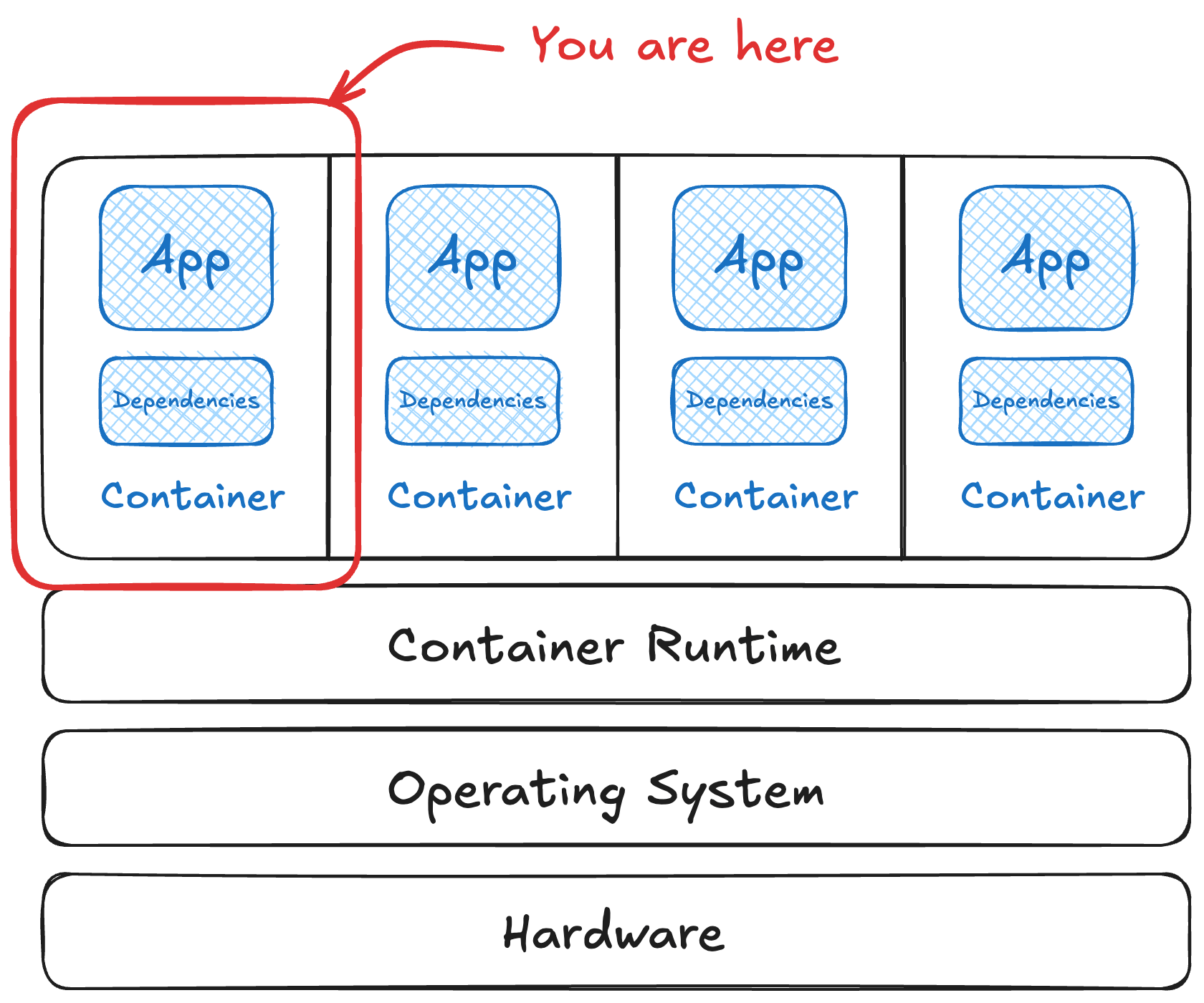

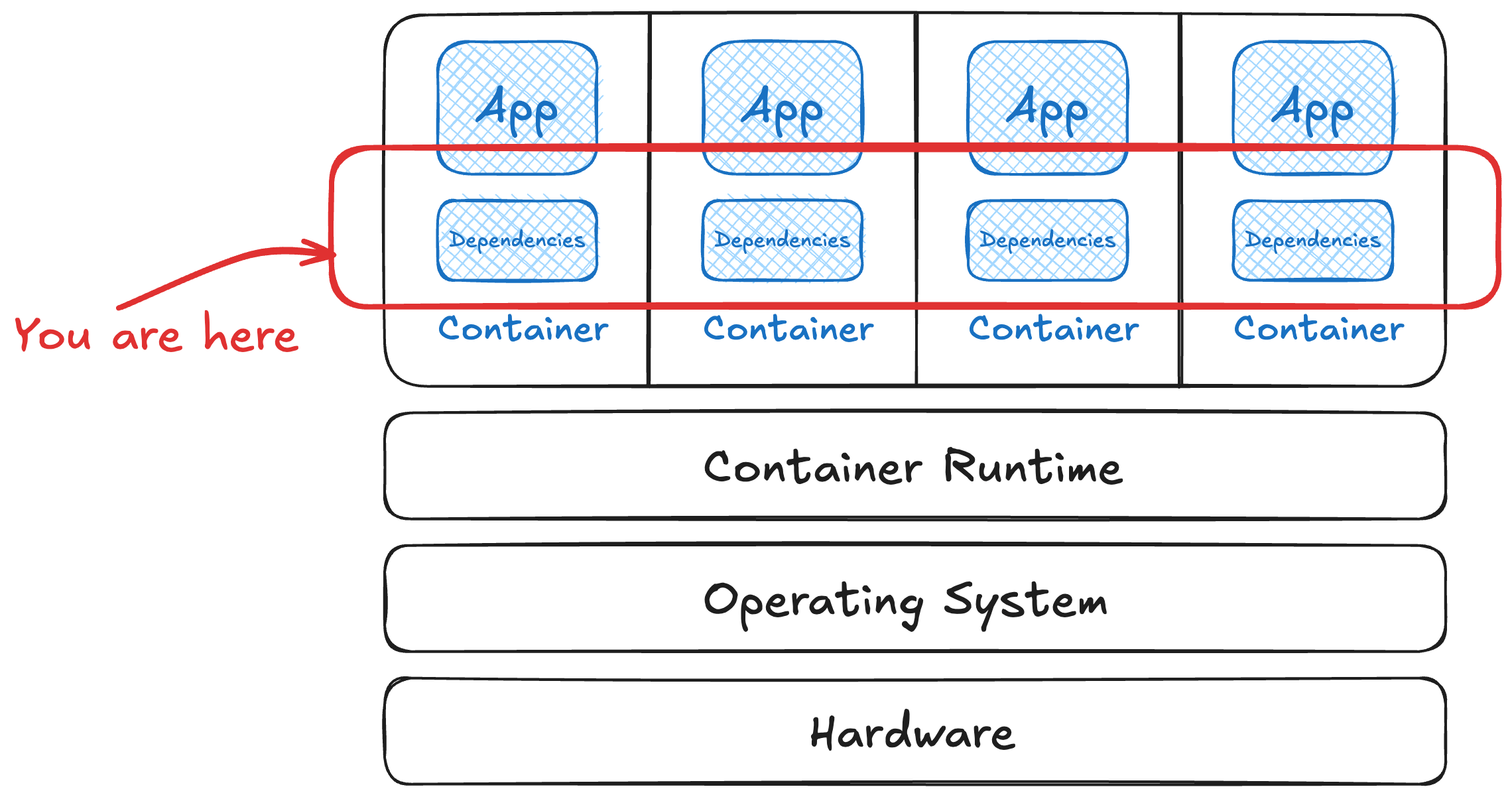

A container is a myth. It’s a series of filesystem snapshots to run a process (or multiple processes). This allows them to carry along their own dependencies. Those dependencies can have known vulnerabilities.

🪄 There is no magic to vulnerability remediation specific to containers. 🪄

The problem is in treating our container builds like we did our virtual machine builds. There’s a big(ish) base image that has a package manager, software for setup or builds, debug tools, and more. It’s just more stuff to collect more vulnerabilities.

risks in images are often mostly due to all the dependencies in the image


Used this way, containers effectively multiplied your virtual machine fleet. Configuration management and vulnerability reporting that scaled linearly with the number of containers didn’t make life great. Small, discrete services to run in a cluster aren’t quite that easy to set up.

As an example, let’s say you had a virtual machine with 3 services installed that showed 100 known vulnerabilities in a scanning tool. Break that into 3 containerized services, each still carrying all the extra software to install and update packages, debug, and so on, and you’ll have closer to 300 findings in a dashboard, not the original 100. This comes from treating containers like VMs.

More findings, regardless of whether they’re

- Exploitable

- Relevant

- Mitigated elsewhere

- Not in use

- Patched soon

It’s noise that makes focus on real problems difficult.

😈 Exploit example - In this lab on chroot escapes, we have to escalate privileges twice - first inside the container, then again on the host machine. While the lab focuses on the escape onto the host, that first escalation is a great example of exploiting a known vulnerability inside a given container.

Much of this comes down to “holding it wrong” for containers. A container is a simple package format, not an isolation boundary, and shouldn’t be used like a VM. Instead, patch things as fast as possible and keep as little as you can in any one container.

How do you know what you know?

This all assumes perfect knowledge of what is or isn’t inside of a container. That’s frequently not the case.

“Scanners” look through the container’s files for all sorts of things. Finding secrets, like tokens or keys, is somewhat simple, since it means looking for specific patterns or high-entropy strings (more on that below, too).
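
The high-entropy half of that heuristic fits in a few lines. Below is a minimal Python sketch, not how any particular scanner works: it splits text into token-like runs and flags long ones whose Shannon entropy is high. The 20-character minimum and the 4.0 bits-per-character threshold are illustrative assumptions; real tools pair a check like this with curated patterns for known key and token formats to cut down on noise.

```python
import math
import re
from collections import Counter

# Illustrative thresholds (assumptions, not any specific scanner's defaults).
MIN_LENGTH = 20
ENTROPY_THRESHOLD = 4.0  # bits per character

# Token-like runs: characters common in API tokens and base64-ish keys.
TOKEN_RE = re.compile(r"[A-Za-z0-9+/_\-]+")


def shannon_entropy(s: str) -> float:
    """Shannon entropy of a string, in bits per character."""
    if not s:
        return 0.0
    n = len(s)
    return -sum((c / n) * math.log2(c / n) for c in Counter(s).values())


def find_candidate_secrets(text: str) -> list[str]:
    """Return long, random-looking tokens that deserve a human look."""
    return [
        token
        for token in TOKEN_RE.findall(text)
        if len(token) >= MIN_LENGTH and shannon_entropy(token) >= ENTROPY_THRESHOLD
    ]


if __name__ == "__main__":
    sample = (
        "LOG_LEVEL=debug\n"
        "DATABASE_HOST=db.internal\n"
        "API_TOKEN=q8Zr3LxV0pT7mWk2Yb9NcF4hJs6Ud1Ea\n"  # fake token for illustration
    )
    print(find_candidate_secrets(sample))  # ['q8Zr3LxV0pT7mWk2Yb9NcF4hJs6Ud1Ea']
```

The thresholds are the whole trade-off: raise them and weaker secrets, like dictionary-word passwords, slip through; lower them and other random-looking but harmless strings start showing up as noise.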

Finding what software is installed is much harder. It requires looking for metadata files like package databases (such as the ones maintained by rpm/yum or dpkg) or lockfiles (like requirements.txt), then matching what they say is in there against databases of known vulnerabilities. That leads to two more complex problems: false positives and false negatives.
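
Here’s a deliberately tiny sketch of that matching step for a Python requirements.txt: parse exact name==version pins, then look them up in an advisory table. The package names and advisory IDs are hypothetical, made up for illustration. Real scanners query curated vulnerability databases, match version ranges rather than single versions, and handle many formats this ignores. The key point survives the simplification: the result depends only on what the metadata claims, not on what’s actually on disk.

```python
import re

# Hypothetical advisory data: (normalized name, exact version) -> advisory IDs.
# Real databases describe affected version *ranges*, not single versions.
ADVISORIES = {
    ("examplelib", "1.2.0"): ["EXAMPLE-2025-0001"],
    ("otherlib", "0.9.1"): ["EXAMPLE-2025-0002", "EXAMPLE-2025-0003"],
}

# Exact pins only ("name==1.2.3"); ranges, URLs, and options are skipped.
PIN_RE = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._\-]*)\s*==\s*([^\s;#]+)")


def parse_pins(requirements_text: str) -> list[tuple[str, str]]:
    """Return (normalized name, version) for each exactly pinned requirement."""
    pins = []
    for line in requirements_text.splitlines():
        match = PIN_RE.match(line)
        if match:
            # PEP 503 name normalization: lowercase, runs of "-", "_", "." become "-".
            name = re.sub(r"[-_.]+", "-", match.group(1)).lower()
            pins.append((name, match.group(2)))
    return pins


def match_advisories(pins: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Look up what the metadata *claims* is installed; no files are inspected."""
    return {
        f"{name}=={version}": ADVISORIES[(name, version)]
        for name, version in pins
        if (name, version) in ADVISORIES
    }


if __name__ == "__main__":
    requirements = """\
# app dependencies
ExampleLib==1.2.0
otherlib == 0.9.1  # pinned for reasons
safe-lib==3.0.0
unpinned-lib>=2.0
"""
    # {'examplelib==1.2.0': ['EXAMPLE-2025-0001'],
    #  'otherlib==0.9.1': ['EXAMPLE-2025-0002', 'EXAMPLE-2025-0003']}
    print(match_advisories(parse_pins(requirements)))
```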

False positives

The “most insecure image ever made” (GitHub) isn’t insecure at all. It’s an image containing nothing but an os-release file and a package database that says tons and tons of vulnerable packages are installed.

```
.
├── etc/
│   └── os-release
└── lib/
    └── apk/
        └── db/
            └── installed
```

But there are no files to be vulnerable at all. 🤷🏻♀️
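
One way to catch this kind of false positive is to cross-check what the package database claims against what’s actually on disk. Here’s a minimal Python sketch. It assumes an unpacked root filesystem and the apk installed-database layout: blank-line-separated entries where P: is the package name, V: the version, F: a directory, and R: a file inside the most recent F: directory. The function names and the demo database are hypothetical. Metapackages legitimately own no files, so treat the result as a lead to investigate rather than proof of tampering.

```python
import os
import tempfile


def parse_apk_installed(db_text: str) -> list[dict]:
    """Parse an apk 'installed' database into package records (assumed layout)."""
    packages = []
    current = {"files": []}
    directory = ""
    for line in db_text.splitlines() + [""]:  # trailing "" flushes the last entry
        if not line.strip():
            if "name" in current:
                packages.append(current)
            current, directory = {"files": []}, ""
            continue
        key, _, value = line.partition(":")
        if key == "P":
            current["name"] = value
        elif key == "V":
            current["version"] = value
        elif key == "F":
            directory = value
        elif key == "R":
            current["files"].append(os.path.join(directory, value))
    return packages


def packages_without_files(rootfs: str, packages: list[dict]) -> list[str]:
    """Names of packages with none of their claimed files present under rootfs.

    Packages that claim no files at all are included too.
    """
    return [
        pkg["name"]
        for pkg in packages
        if not any(os.path.exists(os.path.join(rootfs, f)) for f in pkg["files"])
    ]


if __name__ == "__main__":
    # Hypothetical two-package database; only busybox's file exists on disk.
    db = "P:busybox\nV:1.36.1-r0\nF:bin\nR:busybox\n\nP:openssl\nV:3.0.0-r0\nF:usr/lib\nR:libssl.so.3\n"
    with tempfile.TemporaryDirectory() as root:
        os.makedirs(os.path.join(root, "bin"))
        open(os.path.join(root, "bin", "busybox"), "w").close()
        print(packages_without_files(root, parse_apk_installed(db)))  # ['openssl']
```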

```
~ ᐅ docker inspect -f "{{ .Size }}" ghcr.io/chainguard-dev/maxcve/maxcve:latest | numfmt --to=si
2.0M
~ ᐅ grype ghcr.io/chainguard-dev/maxcve/maxcve:latest
 ✔ Vulnerability DB                [updated]
 ✔ Loaded image                    ghcr.io/chainguard-dev/maxcve/maxcve:latest
 ✔ Parsed image                    sha256:a2608ac82878e37acd255126678d47f44546ba214fb8d0fa12b427ff40b78230
 ✔ Cataloged contents              da3c717365723bf3294968c4b9e36a24a60cfd0ba58a16ff682240a280a7a747
   ├── ✔ Packages                  [44,346 packages]
   ├── ✔ Executables               [0 executables]
   ├── ✔ File digests              [1 files]
   └── ✔ File metadata             [1 locations]
 ✔ Scanned for vulnerabilities     [1069068 vulnerability matches]
   ├── by severity: 52151 critical, 239564 high, 248563 medium, 20525 low, 0 negligible (508265 unknown)
```

Nonetheless, it clocks in with an astonishing million CVEs … that aren’t there. 🫥

False negatives

Scanner results are unreliable when you play with the package metadata in the image.

Using a small demo application, let’s mess with scanners to make our images look “more secure” than they are. This image was clean a few months ago when it was built, but it’s now accumulated known vulnerabilities.

After changing the Dockerfile to remove /etc/os-release and the package metadata (full file), the exact same application, with the exact same functionality, returns fewer findings, or none at all, from the same scanners.

| Image | Trivy 0.67.2 | Grype 0.104.0 |
|---|---|---|
| unmodified application image | 13 vulnerabilities (2 high, 9 medium, 2 low) | 24 vulnerabilities (4 high, 7 medium, 13 unknown) |
| “slimmed” application image | 0 vulnerabilities | 10 vulnerabilities (1 critical, 2 high, 7 medium) |

Nothing was removed except metadata that a scanner needs to read the container. The exact same software has the same known vulnerabilities. They all get counted differently based on the metadata present in the image. Reproducibility directions are in the footnotes.
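
If you consume images you didn’t build, it can be worth checking for this kind of stripping before trusting a clean report. Here’s a minimal Python sketch, assuming the image’s filesystem has already been unpacked into a local directory (for example, with docker export on a container created from the image). The path list covers common os-release and package-database locations for apk, dpkg, and rpm based images; it’s illustrative, not exhaustive, and the helper names are hypothetical.

```python
import os
import tempfile

# Common places scanners look to identify the distro and its packages.
# Illustrative, not exhaustive.
METADATA_PATHS = {
    "os-release": ["etc/os-release", "usr/lib/os-release"],
    "apk database": ["lib/apk/db/installed"],
    "dpkg database": ["var/lib/dpkg/status", "var/lib/dpkg/status.d"],
    "rpm database": ["var/lib/rpm", "usr/lib/sysimage/rpm"],
}


def metadata_report(rootfs: str) -> dict[str, bool]:
    """Map each kind of metadata to whether any of its known paths exists."""
    return {
        kind: any(os.path.exists(os.path.join(rootfs, p)) for p in paths)
        for kind, paths in METADATA_PATHS.items()
    }


def looks_stripped(rootfs: str) -> bool:
    """True when there's no OS identification and no package database at all.

    Genuinely minimal images (one static binary, say) also trip this, so
    treat it as a reason to ask questions, not as a verdict.
    """
    return not any(metadata_report(rootfs).values())


if __name__ == "__main__":
    # Throwaway rootfs mimicking a "slimmed" image: an app, but no metadata.
    with tempfile.TemporaryDirectory() as root:
        os.makedirs(os.path.join(root, "app"))
        open(os.path.join(root, "app", "server"), "w").close()
        print(metadata_report(root))
        print(looks_stripped(root))  # True
```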

As an aside, those CVEs aren’t the biggest weakness in the application. The vulnerability is a command injection on user input (here), which isn’t something a container scanner would find. Any static analysis tool should find it, though. Container scanners are only one part of your security profile, telling you as much as they can about the contents of the image.
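
The demo application’s source isn’t reproduced here, so this is a hypothetical Python illustration of the bug class, not the app’s actual code. It assumes a POSIX system with /bin/sh and an echo executable; echo stands in for whatever command a real app might run. Interpolating user input into a shell command line lets ; or $(...) start extra commands, while passing an argument list with no shell keeps the input a single, inert argument.

```python
import subprocess


def greet_unsafe(name: str) -> str:
    # VULNERABLE: user input is pasted into a shell command line, so shell
    # metacharacters such as ";" or "$(...)" can start extra commands.
    result = subprocess.run(
        f"echo hello {name}", shell=True, capture_output=True, text=True
    )
    return result.stdout


def greet_safe(name: str) -> str:
    # Safer: an argument list and no shell; the input stays one literal argument.
    result = subprocess.run(["echo", "hello", name], capture_output=True, text=True)
    return result.stdout


if __name__ == "__main__":
    payload = "world; echo INJECTED"
    print(repr(greet_unsafe(payload)))  # 'hello world\nINJECTED\n'
    print(repr(greet_safe(payload)))    # 'hello world; echo INJECTED\n'
```

Passing a list only removes the shell. The program still receives the raw input as an argument, so validate it against what that program accepts (a value starting with - could be read as an option, for instance).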

Don’t tamper with package metadata to alter the security scanner results. Removing evidence doesn’t remove the crime. 🫥

Secret mismanagement during builds

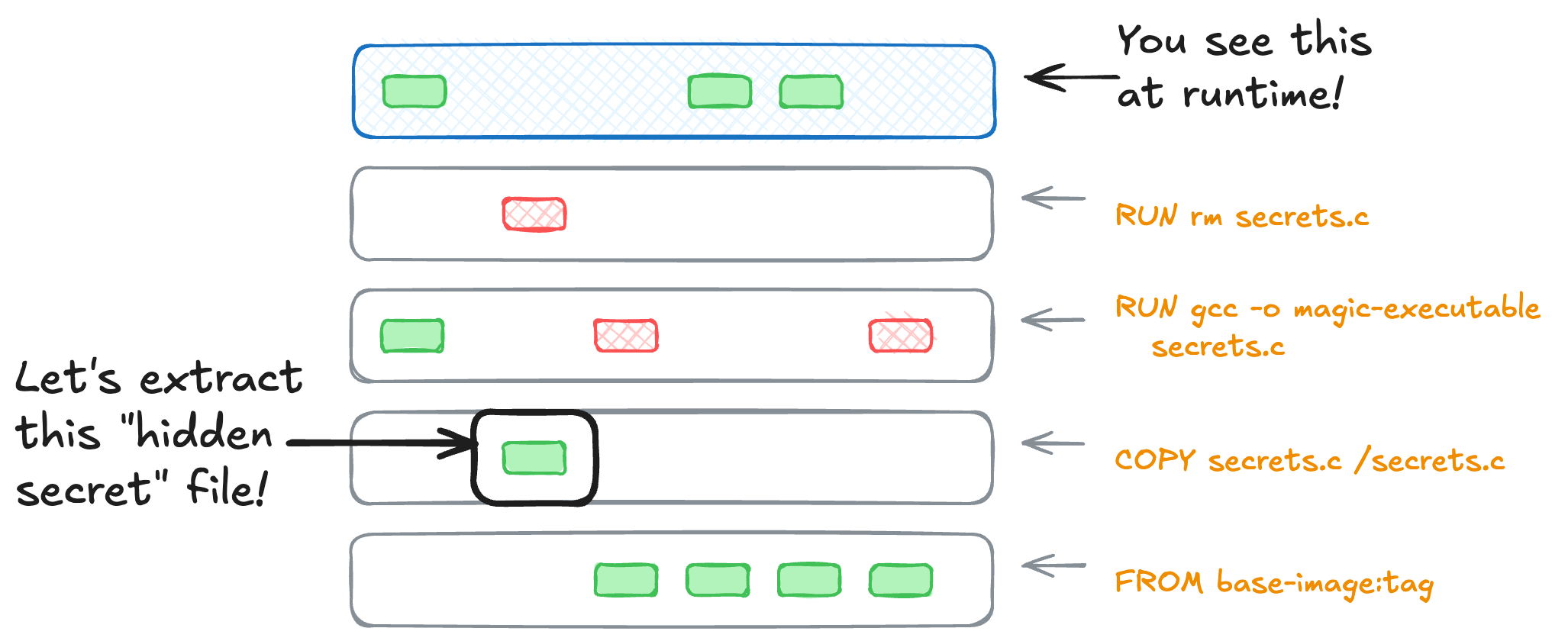

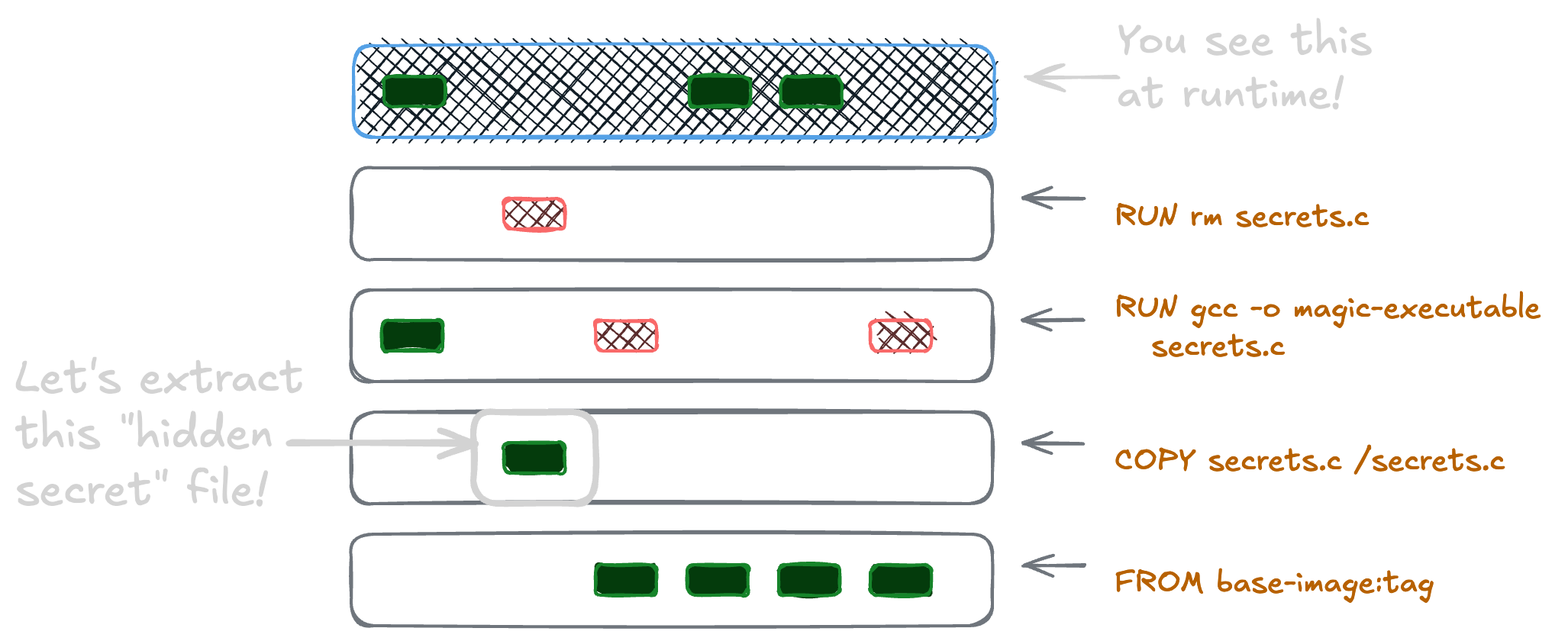

Finding secrets in container images is a straightforward task. Containers are a series of filesystem snapshots. Even if you remove the secret later on, the fact that it was there means it can probably be recovered.

even removed in a later build step, secrets can persist and be recovered from earlier layers

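
To see why deleting a file in a later step doesn’t help, here’s a minimal Python sketch that lists files added in one layer and deleted in a later one, which are therefore still readable from the image archive. It assumes a docker save style tarball: a top-level manifest.json whose first entry has a Layers list of layer tarballs, oldest first, with deletions recorded as .wh.<name> whiteout entries. Opaque directory whiteouts are skipped for brevity, and files that were overwritten rather than deleted are also recoverable but aren’t reported here. The function names, the demo image, and its fake .env file are all hypothetical.

```python
import io
import json
import os
import posixpath
import tarfile
import tempfile

WHITEOUT = ".wh."
OPAQUE = ".wh..wh..opq"


def recoverable_deleted_files(image_tar_path: str) -> list[tuple[str, int]]:
    """List (path, layer_index) for files added in one layer and deleted later."""
    found = []
    with tarfile.open(image_tar_path) as image:
        manifest = json.load(image.extractfile("manifest.json"))
        added = {}  # path -> index of the layer that last added it
        for index, layer_name in enumerate(manifest[0]["Layers"]):
            with tarfile.open(fileobj=image.extractfile(layer_name)) as layer:
                for member in layer.getmembers():
                    path = posixpath.normpath(member.name).lstrip("/")
                    parent, base = posixpath.split(path)
                    if base == OPAQUE:
                        continue  # opaque directory whiteouts: not handled here
                    if base.startswith(WHITEOUT):
                        target = posixpath.join(parent, base[len(WHITEOUT):])
                        # A whiteout hides the target and anything beneath it.
                        for earlier in [p for p in added if p == target or p.startswith(target + "/")]:
                            found.append((earlier, added.pop(earlier)))
                    elif member.isfile():
                        added[path] = index
    return sorted(found)


def _add_bytes(tar: tarfile.TarFile, name: str, data: bytes) -> None:
    info = tarfile.TarInfo(name)
    info.size = len(data)
    tar.addfile(info, io.BytesIO(data))


def build_demo_image(path: str) -> None:
    """Hypothetical two-layer image: layer 0 adds app/.env, layer 1 deletes it."""
    layers = {}
    for name, files in {
        "layer0/layer.tar": {"app/server": b"", "app/.env": b"API_TOKEN=fake-value\n"},
        "layer1/layer.tar": {"app/.wh..env": b""},
    }.items():
        buf = io.BytesIO()
        with tarfile.open(fileobj=buf, mode="w") as layer:
            for file_name, data in files.items():
                _add_bytes(layer, file_name, data)
        layers[name] = buf.getvalue()
    # Trimmed manifest: real ones also carry "Config" and "RepoTags".
    manifest = json.dumps([{"Layers": list(layers)}]).encode()
    with tarfile.open(path, mode="w") as image:
        _add_bytes(image, "manifest.json", manifest)
        for name, data in layers.items():
            _add_bytes(image, name, data)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        demo_path = os.path.join(tmp, "demo-image.tar")
        build_demo_image(demo_path)
        print(recoverable_deleted_files(demo_path))  # [('app/.env', 0)]
```

Against a real image, run docker save -o image.tar <image> first and pass that path instead of the demo archive.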

Luckily, many container scanners and registry tools look for patterns that might be a secret, such as API tokens or private keys. This is one of those parts of container security that’s getting better and better as time goes on.

There’s no way to make a leaked secret a secret again. Change your build to not do this, rotate the old credentials, and move on. 🤷🏻♀️

Images can be insecurely configured, too

There are innumerable ways to do insecure things in container configuration. Some frequent misconfigurations to look out for include:

- Mounting directories that could include
  - sensitive content (such as /home/)
  - system-wide configuration files (such as /etc)
  - way too much content (like /)

- An entrypoint script (that you control) that calls another script on the internet (that you don’t)

- Running the container as a --privileged process on the host and/or running the process inside of the container as root (writeup on enumerating these privileges)

- Many containers still have shells, package managers, and other tools that can be used for living-off-the-land attacks, privilege escalation, and more.

- ONBUILD is an obscure Docker keyword worth knowing. It embeds instructions that execute when this image is used as the base of a later build. At the very least, check whether it exists in the images you build on. While I’ve never seen anything outright malicious, it’s definitely led to some unexpected behavior from unknown dependencies.
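
A few of these checks are easy to automate against docker inspect output. Here’s a minimal Python sketch, assuming the field names HostConfig.Privileged, Config.User, Mounts[].Source, and (for image records) Config.OnBuild as they appear in docker inspect JSON; check them against your Docker version. The sensitive-path list mirrors the examples above and is far from complete, and the root check only catches root by name or UID 0, not other users mapped to UID 0. The entrypoint-script and living-off-the-land concerns still need a human. Function names and the container name are hypothetical.

```python
import json
import subprocess

# Host paths from the list above that are risky to mount (far from complete).
SENSITIVE_MOUNTS = {
    "/": "entire host filesystem",
    "/etc": "system-wide configuration",
    "/home": "user home directories",
}


def audit_container(record: dict) -> list[str]:
    """Return findings for one `docker inspect` record (container or image)."""
    findings = []
    config = record.get("Config") or {}
    host_config = record.get("HostConfig") or {}

    if host_config.get("Privileged"):
        findings.append("runs with --privileged")

    # "User" may be "name", "uid", or "user:group"; empty means root by default.
    user = (config.get("User") or "").split(":")[0]
    if user in ("", "root", "0"):
        findings.append("process runs as root (no non-root User set)")

    for mount in record.get("Mounts") or []:
        raw_source = mount.get("Source") or ""
        if not raw_source:
            continue  # e.g. tmpfs mounts have no host source
        source = raw_source.rstrip("/") or "/"
        for path, why in SENSITIVE_MOUNTS.items():
            if source == path or (path != "/" and source.startswith(path + "/")):
                findings.append(f"mounts {source} ({why})")

    # Image records list ONBUILD triggers here; null/empty when there are none.
    if config.get("OnBuild"):
        findings.append(f"ONBUILD triggers present: {config['OnBuild']}")
    return findings


def docker_inspect(name: str) -> dict:
    """Fetch one record via the Docker CLI (requires docker on PATH)."""
    result = subprocess.run(
        ["docker", "inspect", name], capture_output=True, text=True, check=True
    )
    return json.loads(result.stdout)[0]  # docker inspect prints a JSON array


if __name__ == "__main__":
    # Hand-written, trimmed record in the shape `docker inspect` returns;
    # for a live check use audit_container(docker_inspect("my-container")).
    sample = {
        "Config": {"User": "", "OnBuild": None},
        "HostConfig": {"Privileged": True},
        "Mounts": [{"Type": "bind", "Source": "/etc", "Destination": "/host-etc"}],
    }
    for finding in audit_container(sample):
        print("-", finding)
```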

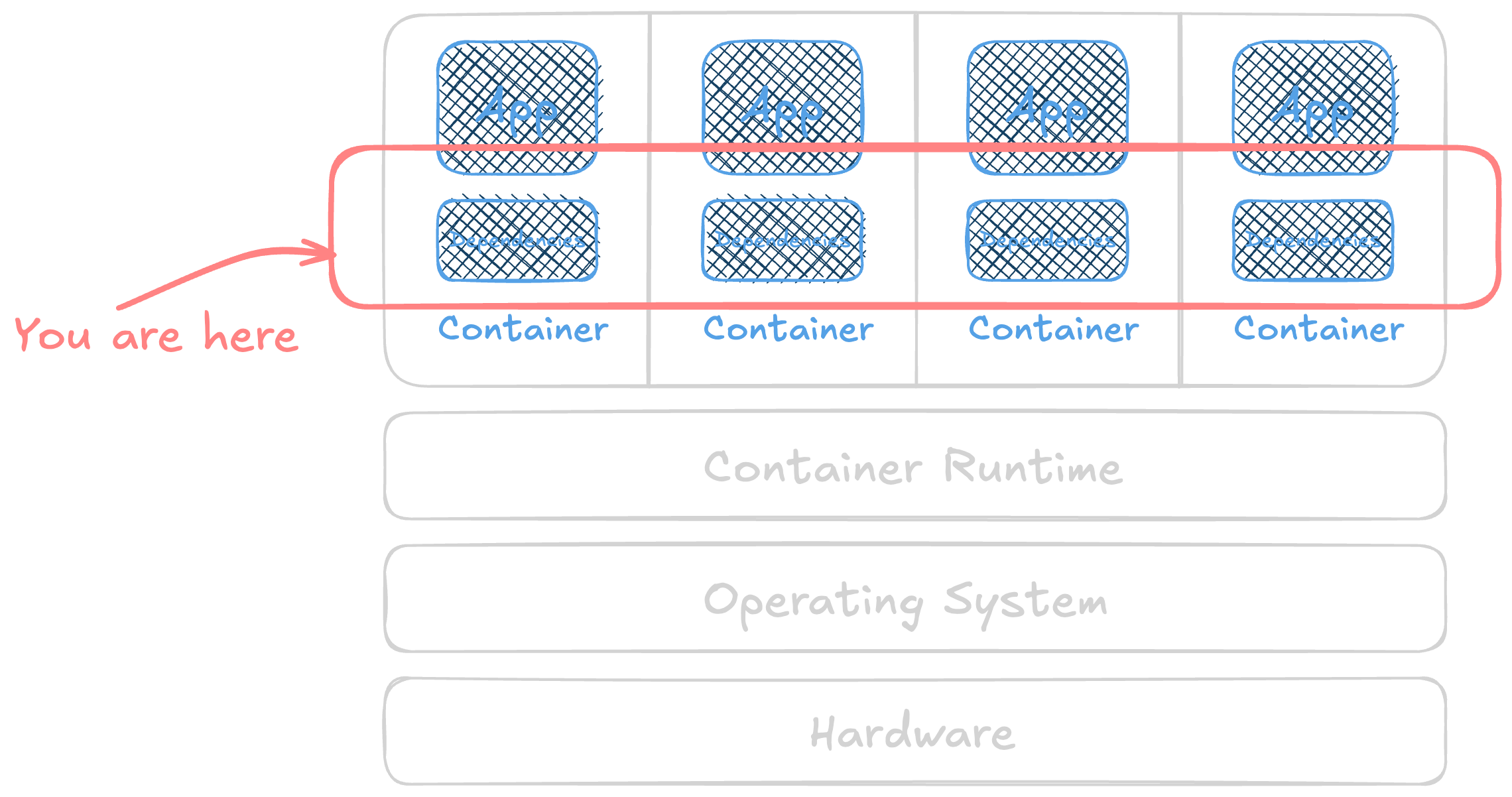

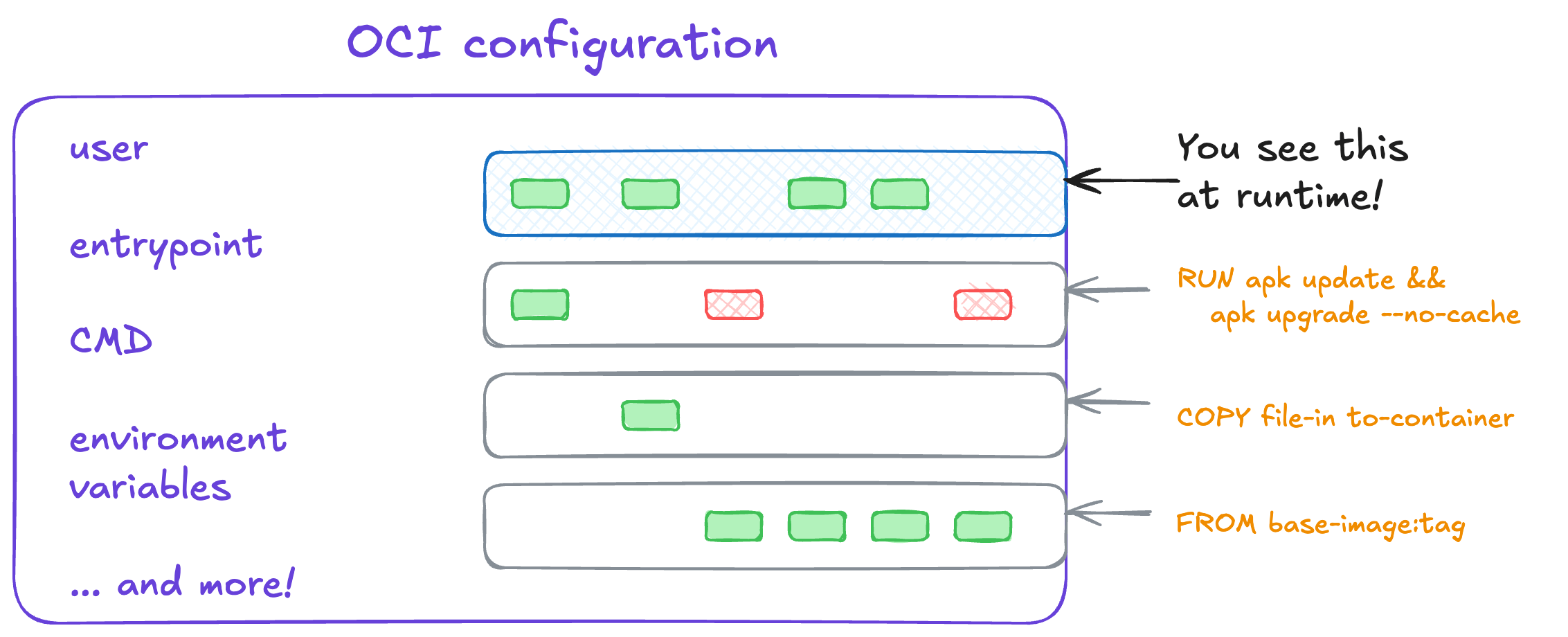

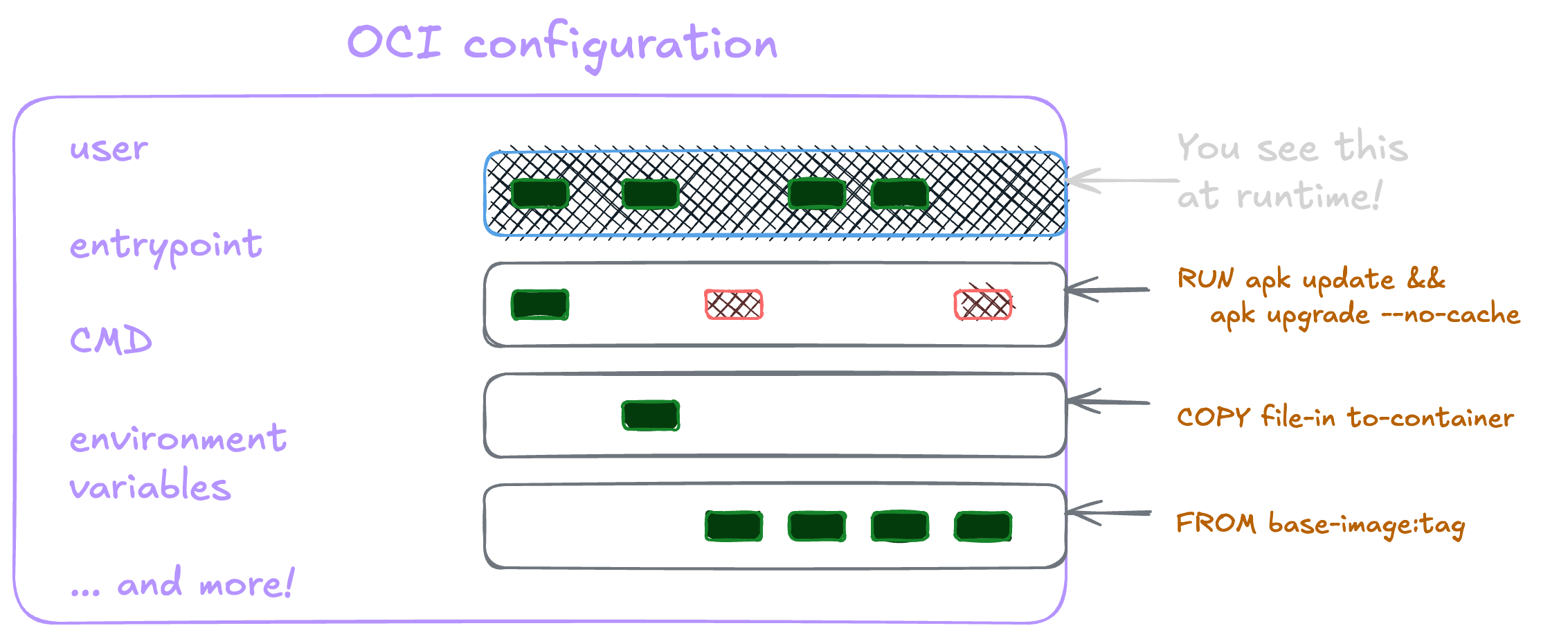

all the configuration included in a container can be insecure, too


😈 Exploit example - Many of the container escape workshops use these misconfigurations to escalate privileges, escape to the host, or otherwise cause shenanigans.

There isn’t one particular vulnerability or configuration to watch out for. It all goes back to the basic security principle of least privilege and the most difficult task of all: knowing the minimum privileges needed for the specific task at hand. 🤷🏻♀️

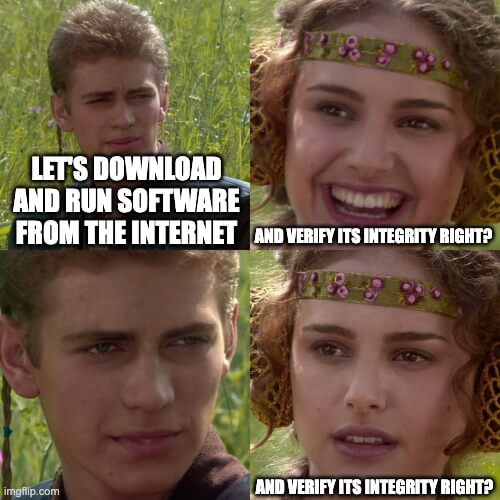

How do you trust?

Fun fact: anyone can push an image to DockerHub. 🤷🏻♀️

Not to pick on that one website though; the same is true of the whole internet.

When your build process assumes you’re pulling in finished artifacts (container images, npm/pypi/whatever-hot-new-ecosystem packages, executable binaries, etc.) as part of the build, it’s difficult to guarantee that <this code> built <this artifact>.

😈 Exploit example - Sadly we don’t have to search too long for these. JFrog did a study in 2024 that found about 20% of images on DockerHub contain some sort of malware. This could be embedded malicious software to steal credentials or other information, crypto-mining to steal compute time, and more. These studies continue to be put out by vendors in the space, showing the increasing scope of the problem (while also promoting their product category that fixes it … these are vendor white papers, after all).

There’s good work being done on supply chain security for open source code and artifacts, such as

- providing build attestations to link source code to finished product

- managed ephemeral build infrastructure as a service

- many ways to cryptographically sign artifacts so you know they haven’t been tampered with

- and a whole lot more too
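
One small, concrete piece of this is pinning base images by digest, as the image references in the footnotes do, so a tag can’t be silently repointed at different content. A digest proves you got the same bytes, not who built them or how; that’s what attestations and signatures are for. Below is a minimal Python sketch that flags FROM lines not pinned by digest. It’s a regex, not a Dockerfile parser: it handles flags like --platform and AS stage names, skips scratch and earlier build stages, and ignores line continuations and ARG substitution. The function name and image references are hypothetical.

```python
import re

# FROM [--flag=value ...] <image> [AS <name>]
FROM_RE = re.compile(
    r"^\s*FROM\s+(?:--\S+\s+)*(?P<image>\S+)(?:\s+AS\s+(?P<alias>\S+))?",
    re.IGNORECASE,
)
DIGEST_RE = re.compile(r"@sha256:[0-9a-f]{64}$")


def unpinned_base_images(dockerfile_text: str) -> list[str]:
    """Return FROM image references that are not pinned by a sha256 digest."""
    stages = set()  # names of earlier build stages, which aren't registry images
    unpinned = []
    for line in dockerfile_text.splitlines():
        match = FROM_RE.match(line)
        if not match:
            continue
        image = match.group("image")
        skip = image.lower() == "scratch" or image.lower() in stages
        if not skip and not DIGEST_RE.search(image):
            unpinned.append(image)
        if match.group("alias"):
            stages.add(match.group("alias").lower())
    return unpinned


if __name__ == "__main__":
    digest = "0" * 64  # placeholder digest for illustration
    dockerfile = f"""\
FROM --platform=linux/amd64 golang:1.23 AS build
RUN go build -o /out/app .
FROM build AS test
FROM registry.example.com/base/static@sha256:{digest}
FROM alpine
"""
    print(unpinned_base_images(dockerfile))  # ['golang:1.23', 'alpine']
```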

Let’s not forget about your code too

Security of a system relies on the whole of the system. Not everything you read in an industry white paper is relevant or important to your application.

All of the examples I use for container escapes in the wild have few known vulnerabilities if you build them. They’ll scan quite cleanly with a container scanner or dependency/configuration checker. Some have no shell to easily pivot with. Some also run without root access, to add to the challenge.

However, they all rely on a command injection vulnerability in the application I wrote, running in that reasonably hardened container, to escape onto the host. Or in other words:

Application security of a containerized application is way more than the containers alone.

Disclosure

I work at Chainguard as a solutions engineer at the time of writing this. All opinions are my own.

Footnotes

To reproduce this yourself, here are the images:

- unmodified image, dockerfile = ghcr.io/some-natalie/some-natalie/command-injection-noshell-noroot@sha256:c5637e002b0506c60b2c2ad2c20352ac9f3f50de6c2c136075c41d9bc695a07b

- modified image, dockerfile = ghcr.io/some-natalie/some-natalie/command-injection-noshell-noroot-nopkgdb@sha256:d8737d7d74d91c42eb07b895df840846e0e029ea8270de6436701ef5c091cdd0