A gentle introduction to container security

“Let’s move to containers” promised engineering simplicity, security, and easy scaling … but there was a catch. 🙊

The simplicity and security gains only held if containers were used as intended. It turns out that containerization is an entirely new system that replaced an equally complex old one, with different tradeoffs to weigh as we built on top of it. That’s not a bad thing, and it isn’t as complicated or scary as it sounds.

🧭 Let’s navigate the intersection of application security, containerization, and systems design together. 🧭

This is part of a series put together from client-facing conversations, conference talks, workshops, and more over the past 10 years of asking folks to stop doing silly things in containers. Here’s the full series, assembled over Autumn 2025. 🍂

How did we get here?

I started as a sysadmin, deploying software on actual hardware in everything from server “closets” to datacenter racks. This had plenty of problems, including but not limited to:

- Hardware failures

- Resizing hardware to match the application’s workload

- Limited “cotenanting” of applications, as they’d need to share system dependencies (e.g., the same version of Ruby)

- Juggling application workload needs to make efficient use of hardware resources 🤹🏻♀️

- Handling OS updates means taking the system(s) offline, making updates and failovers a matter of skilled design

- Spending minutes praying it comes back up after rebooting 🙏🏻

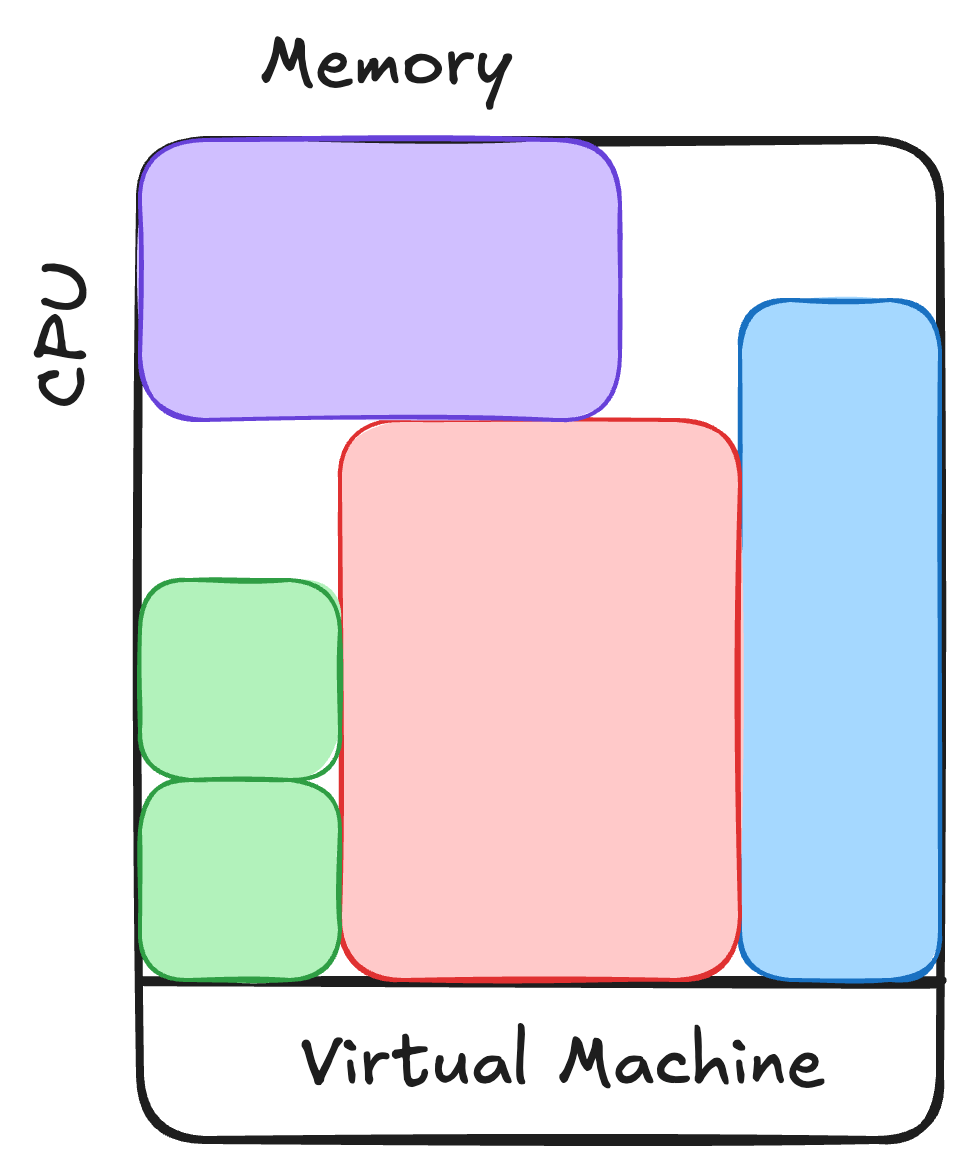

Virtual machines solved many of these problems.

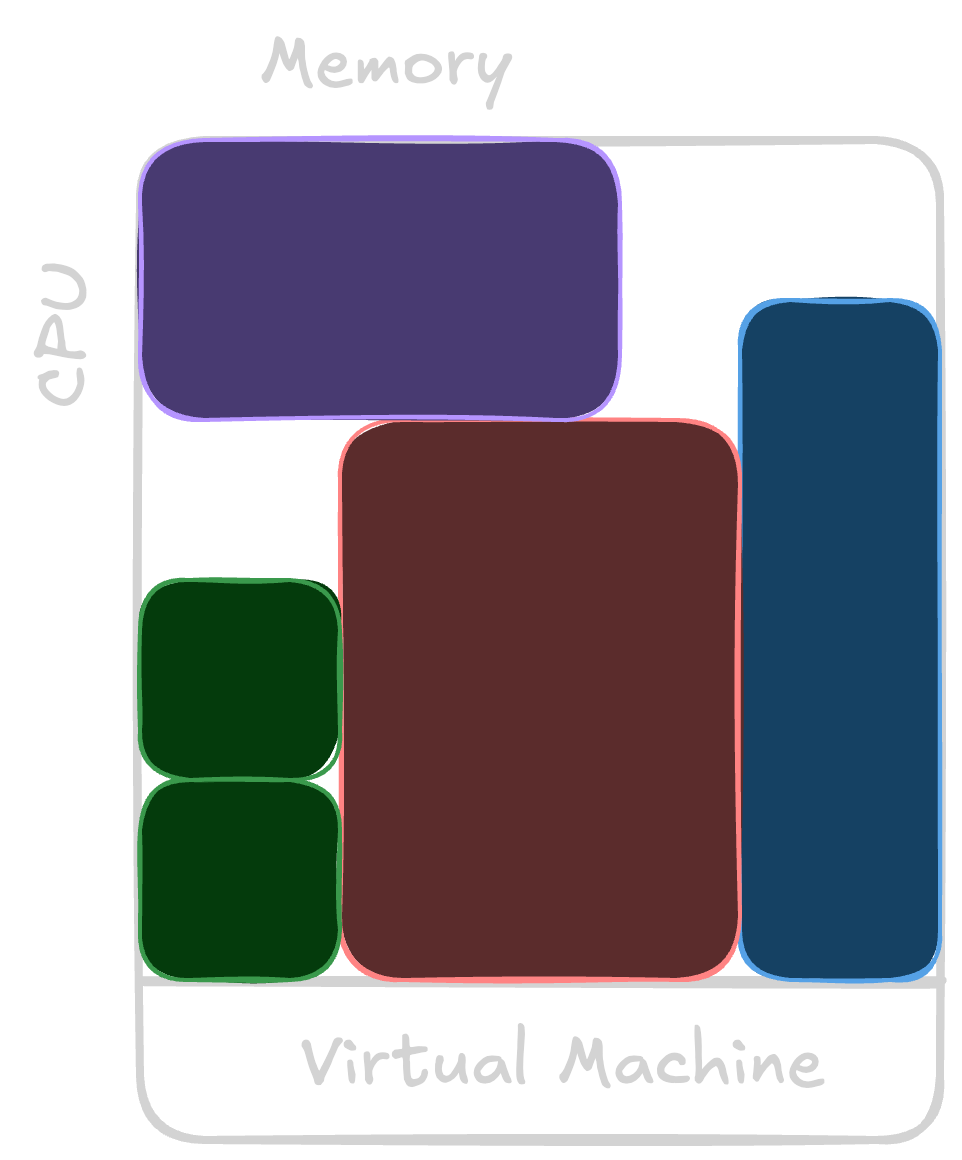

VMs allowed us to abstract away many of the hardware sizing and failure problems, as well as share hardware safely across many users. Limiting applications to a single set of resources created a deployment pattern of “virtual appliances,” where the image contained only the files the application needed to run.[1] Handy new capabilities saved effort: disk snapshots for fast failure recovery, resizing VMs to give them more hardware, and virtualized networking to cut down on cabling. A bad upgrade also became less likely to nerf large swaths of my infrastructure.

While many hardships from running on bare metal were fixed, resource management didn’t go away. Resizing these “not-quite-physical boxes” wasn’t much less painful than adding hardware.

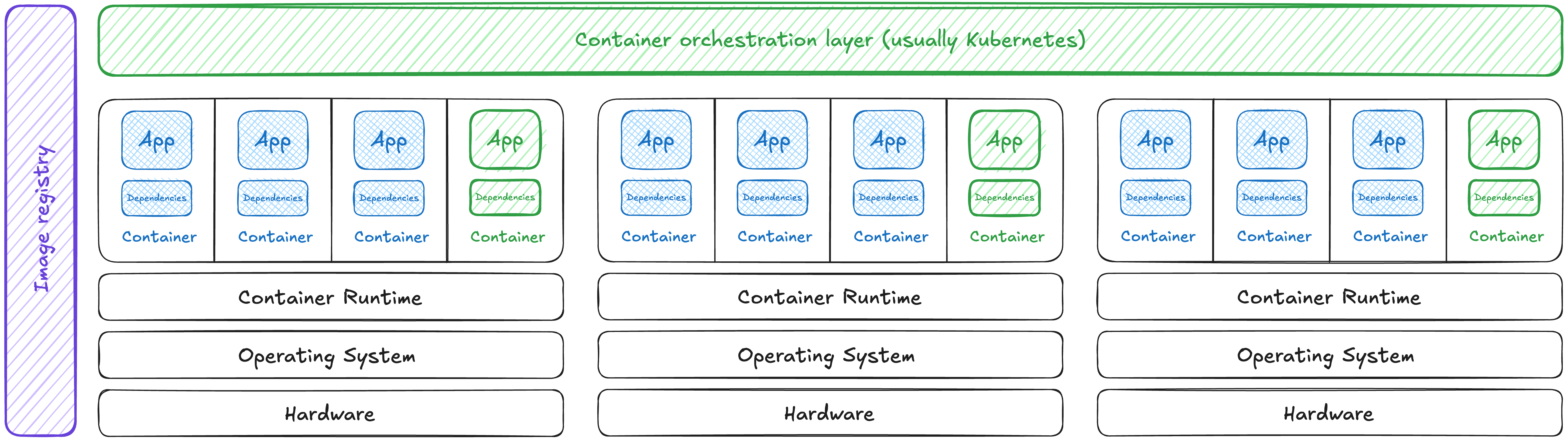

Containers gave us the best of both worlds, at a cost. We got to isolate our dependencies by process, like we did in a virtual machine. We also got to efficiently “pack” workloads onto our hardware resources. For applications so large they cannot fit on a single machine, we can orchestrate their parts across many hardware instances. However, the security model is much closer to sharing a bunch of hardware than to running virtual machines.

Not all cybersecurity and compliance frameworks made this shift from VMs back toward securing multi-tenant applications that share resources. Many teams I talk to still apply a virtual machine threat model to their containerized workloads.

It’s easy to make fun of having junior engineers fill in point-in-time spreadsheets of 1000+ controls for each container. Apart from being expensive, this approach is dangerously slow and inaccurate, because containers provide only an illusion of isolation.

Containers are a handy abstraction

it’s the one and only blue ball machine

Abstractions are engineering tradeoffs. Containers provide an easy way to bundle an application’s dependencies with it, agnostic of whatever it runs on or with. Yet containerized systems are often discussed, regulated, and used as though they were “virtual machines with less overhead,” a fallacy I enjoy debunking on the regular. 😇

Without deliberately understanding those tradeoffs, you’ll end up with a gigantic system that you only partly understand and can only partly operate and secure.

Where we’re going

Let’s pull apart the key security risks at each layer of the container ecosystem, shown above. At each point, we’ll pick up some actionable insights for assessing and mitigating threats, as well as a few of my favorite “gotchas” that I’ve found in the field.

Up next: Okay so … what exactly is a container, anyways?

Footnotes

1. Virtual appliances are still the most common application deployment pattern I see. I don’t know whether this is selection bias, as I work only with the public sector and adjacent big companies in heavily regulated industries.