Container host shenanigans

Last time, in “what’s a container, anyways”, we learned that containers are Linux processes with a few extra guardrails. These processes need a Linux-based system to run on. Let’s take a look at the risks across the hardware and the operating system running on it.

These risks are just as real for a managed service as they are for bare metal in your datacenter. While they’re universally applicable, how you understand and mitigate them will vary.

Let’s pull that apart a few different ways and map the problems to possible solutions.

This is part of a series put together from client-facing conversations, conference talks, workshops, and more over the past 10 years of asking folks to stop doing silly things in containers. Here’s the full series, assembled over Autumn 2025. 🍂

Physical access

✨ If someone has physical access to your hardware, there is nothing that I can say or do to help you.1 ✨

Physical access is its own type of threat modeling for a reason. It’s genuinely a different discipline than what most cybersecurity frameworks were designed to deal with. Key questions to ask about physical threats include:

- Who has access to your hardware?

- How is the hardware secured? Is it in a locked cage? Who has the keys?

- Is access to the hardware logged? How often is that log reviewed?

- What does power or network failover look like?

- … and more …

It’s fun to talk about the forbidden 110V to CAT5 adapter pictured, but if someone can plug in that abomination, it’ll permanently disable at least that port and probably more. Finding an unknown device on your network is never a pleasant surprise, and it’s a far more common form of malice than the silly destruction cable. ⚡️

📚 Story time! When I was working in a managed services organization, one of my customers had a couple of 42U racks (think big 6’ tall cabinets) of hardware running in a locked closet. One Sunday, it went down for about an hour and came back up. The same thing happened again two weeks later, within a few hours of when the first outage hit. I decided to run a few errands close to that side of town in two weeks’ time. Sure enough, I got paged that afternoon.

The office cleaning crew had plugged a new floor wax machine into a circuit that was shared with one of the cabinets. It’d trip the circuit breaker, forcing the equipment offline once the little battery backup depleted itself. The crew would usually notice the trip, then flip the breaker back on. That circuit wasn’t supposed to be accessible outside of the locked server closet, which was an easy fix for an electrician to handle. While there was no malice, it cost thousands of dollars in reduced availability and in delays from restarting long-running jobs.

First, understand the availability needs of your system. If you’re using a cloud provider, many/most/maybe-all of these are already handled for you. Consult your vendor’s documentation for information. If you’re owning and running hardware in-house, many compliance frameworks have a section for physical access controls.2

Host component vulns

More software installed = more software to patch for security

This is true of a container host just as much as any other system. Modern Linux operating systems can be so very many things - databases, web servers, application servers, load balancers, a general-purpose desktop with a keyboard and monitor, a print server, a controller for industrial equipment … and thousands upon thousands of other things. Each of these roles adds complexity to upgrades, attack surface, and maintenance costs.

Only install the minimum needed to do one task. Use a purpose-built operating system like CoreOS, or the invisible-to-users operating system that underpins managed Kubernetes offerings in the cloud, like CBL-Mariner (Microsoft). Many of these are also immutable, meaning the system files are read-only by default to further restrict what mischief we can get into on escape.
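One way to sanity-check the “read-only by default” part on a node is to look at its mount options. Here’s a minimal sketch, assuming you feed it text in the `/proc/mounts` format. The function name `mount_is_read_only` is mine, and which paths an immutable OS mounts read-only (for example `/usr` rather than `/` on some distributions) is an assumption to confirm against your OS documentation.

```python
def mount_is_read_only(mounts_text, mountpoint):
    """Return True if `mountpoint` is mounted with the "ro" option.

    `mounts_text` is text in /proc/mounts format:
        device mountpoint fstype options dump pass
    Returns False if the mountpoint is not listed at all.
    """
    read_only = False
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 4 and fields[1] == mountpoint:
            # A later mount on the same path shadows an earlier one,
            # so the last matching line wins.
            read_only = "ro" in fields[3].split(",")
    return read_only
```

On a Linux host, you could pass it the contents of `/proc/mounts` and check whichever paths your OS claims are immutable. A read-only mount is only one signal, not proof that a node can’t be tampered with.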

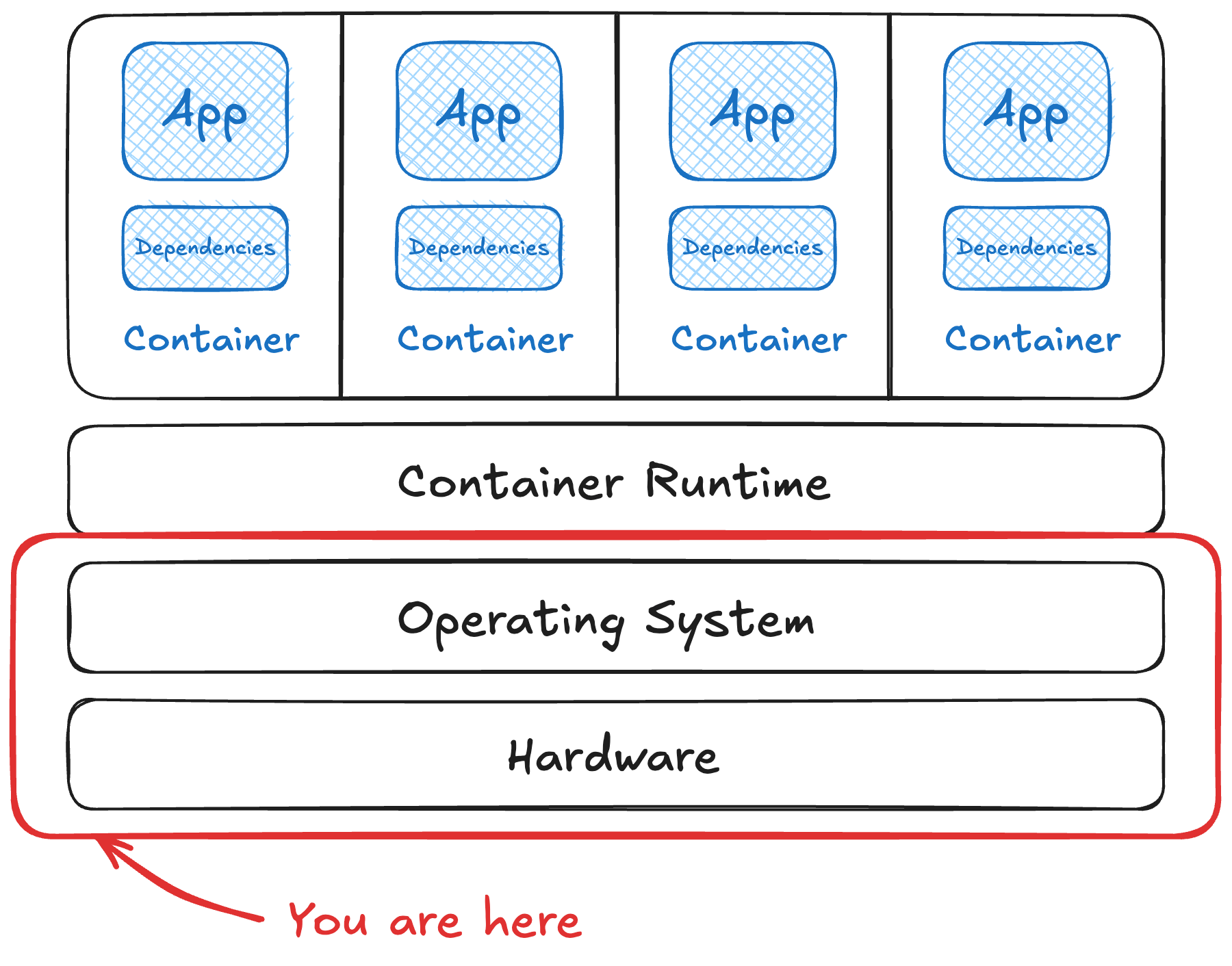

Sharing a kernel

This is what cotenanting containers looks like …

Containers rely on the host’s kernel to manage resources. A flaw in that kernel or how it’s configured to share resources is exactly this problem.

The (now years old) “dirty COW” vulnerability (CVE-2016-5195) is a textbook example of this risk. It relies on a race condition in how the kernel handles copy-on-write for private, read-only memory mappings. For a container escape, you can use that behavior to rewrite core system files like /etc/passwd for privilege escalation. A container runtime doesn’t isolate a process from this, either.

I’m not sure kernel bugs should keep you up at night so long as you’re regularly and quickly patching the software you ship. Isolating high-risk workloads, such as CI/CD systems, also helps limit the potential harm. Kernel bugs are scary, but there are so many eyes on the kernel that it’s likely easier to just stay up-to-date.
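If you want a rough signal for “are we up-to-date,” comparing the running kernel release against a minimum is a start. This is a minimal sketch with hypothetical helper names (`parse_kernel_release`, `is_at_least`). The `(4, 8, 3)` threshold below is only illustrative: it’s one of the upstream releases that carried the dirty COW fix, and distribution kernels backport fixes without bumping those numbers, so your vendor’s advisories are the real source of truth.

```python
import re


def parse_kernel_release(release):
    """Turn a release like '5.15.0-91-generic' into (5, 15, 0).

    Missing minor or patch components are treated as 0.
    """
    match = re.match(r"(\d+)(?:\.(\d+))?(?:\.(\d+))?", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return tuple(int(part or 0) for part in match.groups())


def is_at_least(release, minimum):
    """Compare a kernel release string against a (major, minor, patch) tuple."""
    return parse_kernel_release(release) >= minimum


# Illustrative threshold only; set this from your own patch policy.
ILLUSTRATIVE_MINIMUM = (4, 8, 3)
```

On Linux, `platform.release()` from the standard library returns the release string to feed in. Run it inside a container and you’ll get the host’s kernel version back, which is the shared-kernel point in a nutshell.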

Improper user access rights

User management, authentication, and authorization are hard to do well. Messing them up is a lot simpler. Doing as little as possible on the host means less to maintain. A common place to find this pattern is folks who run containers on a system, but also keep a bunch of static configuration around in order to

- let a user SSH into the system (usually a security scanner of some sort)

- run non-container workloads on the same system

- perform maintenance or deploy containers directly on each node

These tasks aren’t wrong in and of themselves, but they give you 10x the amount of surface area to secure. Now you’re handling authentication (usually PAM), probably SSH and TTY sessions, and likely connectivity to an identity provider too. All of these systems need to be configured, maintained, and monitored.

😈 Exploit example This pattern of logging into user accounts that exist on the node as well as the container is an easy way out of a container. We used this, in part, in our user enumeration exercises and in our runtime user group escape. It’s also helpful when writing to the host’s filesystem, so that we know what user should own the file permissions.

It’s much simpler to not have any user accounts beyond what the runtime is using. Having nodes with a single purpose, running containers, makes that an easier task. This is why managed Kubernetes services (e.g., AWS EKS or Azure AKS) or run-container-as-a-service platforms (AWS ECS or Azure Container Apps) don’t even offer the ability to touch the node.
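To see how far a node is from “no extra accounts,” you can list which accounts have a usable login shell. A minimal sketch, assuming the standard seven-field `/etc/passwd` format; the function name and the set of “non-login” shells are my assumptions, so adjust them for your distribution.

```python
# Shells that conventionally mean "this account cannot log in".
# This set is an assumption; distributions vary.
NON_LOGIN_SHELLS = {
    "/sbin/nologin",
    "/usr/sbin/nologin",
    "/bin/false",
    "/usr/bin/false",
}


def login_capable_accounts(passwd_text):
    """Return account names whose shell is not in NON_LOGIN_SHELLS."""
    accounts = []
    for line in passwd_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split(":")
        if len(fields) != 7:
            continue  # skip malformed entries rather than guess
        name, shell = fields[0], fields[6]
        # An empty shell field falls back to /bin/sh, so it counts too.
        if shell not in NON_LOGIN_SHELLS:
            accounts.append(name)
    return accounts
```

This is a rough signal only. An account with a shell may still be locked elsewhere (for example in `/etc/shadow`), and accounts can also come from an identity provider rather than the local file.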

Host file system tampering

It’s tempting to share files from the host into a container because it’s easy. Setting up a network share or other resource isn’t as simple as passing storage into the container. It’s a bad idea to do this, since it’d let that process modify the host it’s running on.

😈 Exploit example We relied on exactly this exploit in our persisting through persistent storage escape, recorded below.

If you need to store files in a container, configure the appropriate storage off the host file system instead. This could be as straightforward as a volume in your runtime, or persistent or ephemeral storage in Kubernetes. Doing this puts your runtime and/or orchestrator on point for managing its own storage as you define it. Immutable node operating systems take this a step further, limiting the amount of mischief that can be done by preventing changes to important files.
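To find containers that already have host paths passed in, you can scan the `Mounts` section of `docker inspect` output. Here’s a minimal sketch, assuming Docker’s JSON output (an array of container objects, each with `Type`, `Source`, `Destination`, and `RW` on its mounts). The function name is hypothetical, and other runtimes will need a different parser.

```python
import json


def writable_bind_mounts(inspect_json):
    """Return (container, source, destination) for each writable bind mount.

    `inspect_json` is the JSON text printed by `docker inspect <container>...`.
    """
    findings = []
    for container in json.loads(inspect_json):
        name = container.get("Name", "<unknown>")
        for mount in container.get("Mounts", []):
            # "bind" means a host path was passed straight into the container;
            # runtime-managed volumes report Type "volume" instead.
            if mount.get("Type") == "bind" and mount.get("RW", False):
                findings.append(
                    (name, mount.get("Source"), mount.get("Destination"))
                )
    return findings
```

Read-only bind mounts are skipped here because they can’t modify the host, but they can still expose host files to the container, so they’re worth a look too.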

tl;dr

Container hosts are a great target, but they’re not impossible to secure. Doing one thing well is great security advice here. Managed services handle most of this headache for you, for a cost. But even if you’re running your own hardware, following these basics will save you from becoming someone’s afternoon entertainment.

Up next: Fun times in runtimes! (back to the summary)

Footnotes

1. Having trusted compute, but not trusting the hardware it runs on, is exactly the problem that confidential computing is trying to solve. The Confidential Computing Consortium has a ton of resources to learn more.

2. In the absence of guidance, NIST 800-53 rev. 5 has a control family called AC for access control, plus a PE (Physical and Environmental Protection) family that covers physical access specifically.